Debugging a CPU

This is the story of finding a bug in the ZipCPU.

It starts much like any other FPGA story: somewhere else.

|

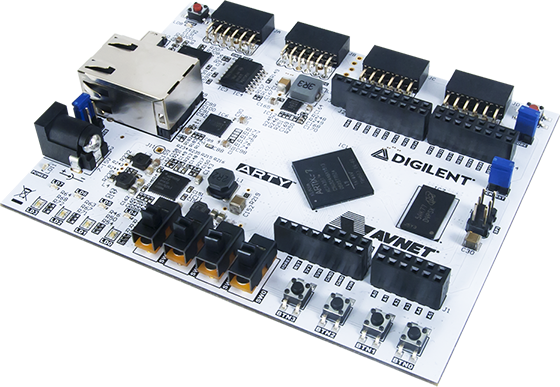

Some time ago, Digilent replaced the flash chip within their Arty FPGA board. They also created a line of new FPGA boards, so my Arty board has now been rebranded as the Arty A7. I never realized there was a difference until someone wrote to tell me the design didn’t work anymore. With a little bit of digging, he and I discovered that the flash chip had changed. The new flash chip wasn’t just another chip from the same vendor, it was now from a different vendor entirely: from Micron to Spansion.

This broke my old flash controller.

Not a problem, I thought to my self, I was hoping to write a blog article on how to build a Universal Quad SPI flash controller. This new controller needed to be tested and proven in real hardware. Let me just place this universal controller into my OpenArty design and then all shall be well. Indeed, all shall be better: my universal Quad SPI flash controller runs at twice the speed. This will be a nice upgrade!

But what about the differences between the two flash chips? I can use AutoFPGA to help me select between configurations of this universal flash controller.

|

The only problem is that the OpenArty design wasn’t an AutoFPGA design to begin with. Ok, that’s not really a problem, we can update the OpenArty design so that it uses AutoFPGA. That will make it even easier to test my HyperRAM controller as part of my OpenArty project as well.

The next problem is that the original OpenArty design requires an external serial port in addition to the one on the board. This may have kept folks from using the design, and so my newer designs have been multiplexing a console port onto the serial debugging port. This is a fairly simple upgrade, so we can do that too.

Further, when I was struggling to get the flash controller working, I had just had a bad experience with the ZipCPU on the iCE40s that had forced me to change my default linker script(s). After a quick upgrade to AutoFPGA, it now supports multiple custom linker scripts, but that now meant that the bootloader needed to change as well.

Other things had changed and needed to be updated as well. For example, the ZipCPU had now been formally verified. I found a lot of bugs in that process some time ago, and so I was excited to be updating the design with the new formally verified CPU.

In the middle of this, I chose to switch to Vivado 2018.3 from Vivado 2016.3.

Is this starting to sound all too familiar?

Years ago, when I studied Software Engineering, we would call each of these a “Small matter of Programming” or SMOP. This is said in a tongue-in-cheek fashion, however, because nothing is truly ever a “Small matter of Programming”. As an engineer I also tend to (inappropriately) judge each of these changes to be small and simple matters.

The result was that, with so many changes, I was threatening to violate the fundamental assumption of all debugging: “There’s only ever one bug in the design.” Sure, I know, that gets violated all the time, but think about it: most debugging methodologies can only handle one bug at a time.

So, today’s blog post is about the “final” bug in this process, and one I certainly wasn’t expecting.

Background

We’ll pick up the story from the point where I was working on my new flash controller. In my mind, this was the only big thing that had changed in this design:

-

The CPU hadn’t significantly changed

-

It now passed formal verification in many different configurations.

This verified instructions up to the input of the ALU. All instructions were known to be properly performed, the pipeline timing was known to not drop or skip instructions, and for not inserting instructions. Further, the difficult part of making sure memory instructions were not issued unless I was willing to commit to them had also been verified.

-

I had just recently found and fixed the bug that had prevented the CPU from starting at an arbitrary address. The loader could now load a program and start it from any address–not just the reset address.

-

The ZipCPU simulator is supposed to be able to load any program into the ZipCPU, bypassing the loader. This is primarily useful if you want to bypass writing to the flash via the flash driver using the cycle-accurate flash simulator, when you didn’t expect a bug in the flash interaction. Why wait to program the flash? The only problem was that this simulation loader had a bug in it that kept it from starting ZipCPU at any address, restricting it to starting from the reset address only.

This bug had now been found and fixed, and the “WARNING” statement indicating the presence of the bug has been removed.

-

The CPU now has a new data cache.

This one I knew worked. I’d also formally verified it, and ran it through simulations, so I could be confident that this worked. Or … could I?

I knew the CPU was ready. That was the easy part.

The AutoFPGA

updates, those were a bit harder. I had now switched conventions

regarding how I referenced peripherals. All peripherals at fixed addresses

now had names starting with an underscore like _flash, _sdram, _clrleds,

and so forth. These all had to be changed, and so the

OpenArty ZipCPU

software all

needed to be adjusted.

I also wanted to bring up the

Arty

with fewer capabilities–just to work one by one through them. This meant a

limited AutoFPGA

build, but it also broke many of the programs that now depended upon

non-existant hardware registers–since they weren’t part of the limited

build. This was easily fixed through the per-component #define macros

provided by

AutoFPGA, but

it still required software to be updated to use those macros.

That brought me to the new flash controller. I knew this flash controller was ready, but not yet hardware proven. That was okay, since I was going to use this opportunity to verify my new universal QSPI flash controller worked on real hardware, to finish testing the driver for it and to blog about it next. I just needed to get it working–at 2x the clock and data speeds. I expected this to take a bit of work.

Indeed, the flash

controller

took a lot of work. I struggled to read and understand the timing of

both Xilinx’s IDDR and ODDR

modules.

I was struggling with things like the

flash writing properly, but not

reading properly. Or, perhaps worse, it would read mostly properly. This

killed my

loader,

since I would write the ZipCPU

program to the flash, and then

attempt to read my data back in order to verify

it was correctly written. If the reads were only somewhat reliable, then my

flash driver

would always try to reprogram the

flash.

This reprogramming happened nearly all the time, even when reloading the same

program with the same instructions, indicating that something was broken.

This also left me wondering if the CPU was reading the right program from the flash.

For a while, I went forward with this “broken” flash controller anyway.

I got as far as the demonstration program that is designed to demonstrate the Arty’s 100MHz MII ethernet port. This software handles ARP transactions, while sending pings to a nearby host. It’s also supposed to be able to respond to a ping request. The program was failing. For some reason it was locking up mid-design.

I figured it must be a problem with my new flash controller. Perhaps the CPU was misreading its instructions from the flash.

While this post isn’t about the bug in the flash controller, that can wait for a later post, it is important that you understand what was going on because it sets the background for what follows. Basically, I was able to write anything I wanted to the flash without any problems, but reads were unreliable. It might be that they were 99.99% reliable and then there would be a bit flipped. I figured this could only be a timing problem, and that I was going to need to write and blog about how to build a synchronization circuit.

In other words, when the network program failed, I had no idea where within the design to look for the problem.

MAX BAXTER from twitter suggested

I change the drive strength on the

flash. The

Micron

flash chip has several

control registers, among them are the Extended Volatile configuration register

and the Extended Non-Volatile configuration register. Both of these registers

can be used to adjust the drive strength in Ohms. (Drive strength? Shouldn’t

the units of drive strength be Amps and not Ohms?) The first adjustment I

tried worked, and so my flash

controller,

was finally working!

So the network ping program should too, right?

Wrong.

Okay, that was my last working hypothesis. Now what’s wrong?

Symptoms

So let’s start by taking stock of this situation. My goal at this point is to verify that the MII Ethernet controller was fully functional, even after redesigning the top level of the project to use AutoFPGA.

-

My new flash controller now worked. It could read and write the flash at an SPI clock rate twice what it was before, and now equal to the system clock rate. If I wrote a design to the flash at this point, I could read it back at any time to verify the correct design had been written.

There were now no more differences between the data I wrote to the flash and the data I read back out, so I figured there were no more bugs in the flash controller.

|

-

My goal was to test whether or not I could send and receive packets via the Ethernet port.

I have a “manual” approach to sending a packet and receiving a packet. This doesn’t use the ZipCPU at all, but rather controls the networking device through the debugging port. I could even do this via a shell script if I wanted–only it’s harder to calculate/verify a CRC from a shell script. Indeed, this manual ping program was how I originally built and tested the network controller.

This manual approach isn’t very useful for debugging a ping interaction, though, since a proper ping exchange requires an ARP request, then an ARP response, followed by sending the ping packet itself, as shown in Fig 3 on the right. The manual approach sends one packet and then waits until the first response is received. This isn’t enough to get a ping response from my host machine.

What I really wanted to know, was whether the ping response was received from my host machine. Once my desktop/host receives the ping request from my Arty board, it then needs to send an ARP request to my board, get an ARP response, and then finally return the ping.

That’s a lot of network transactions that are required just to implement a ping.

The good news is that you can debug what goes over the channel using Wireshark. Using Wireshark, I discovered …

-

That the network wasn’t working at all initially. I traced this down to a failure to send the reference clock to the PHY chip in the Arty.

-

That the MAC address from my device was being left at zero. I traced this down to what might be a compiler issue. (I’ll need to come back to this, as it’s been “fixed” but hasn’t been fully chased down.)

-

That my host computer is sending ARP responses, but that they aren’t getting properly received.

These could all be fixed fairly easily, once I realized what was taking place. Fixing these issues got me further along, just not quite there.

-

-

Eventually, the ZipCPU system hangs and stops running.

I could tell this by using global variables.

As you may recall, I can read and write any value within my design using

wbregs. Thewbregsused by the OpenArty distribution can accept an optional parameter telling it where to find a map file defining where all the variables are in memory. The map file itself is generated by the linker, so there’s no extra work in that part. As an example, I can read the variableuser_heartbeatsby running,

% wbregs -m simple_ping.map user_heartbeatsI knew my program had stopped once this number had stopped incrementing. This is also how I can (somewhat) tell what’s going on within my design even while a program is running, without needing to stop it.

-

When the ZipCPU hung, the debugger also failed. Indeed, if you bring up the debugger, it hangs waiting for the ZipCPU to respond to it.

This is disappointing. The CPU isn’t supposed to work like that.

During this time, I can reset the CPU to get into the debugger, but doing so causes the system flags and the program counter to get reset. Hence, I can’t tell if the CPU was in supervisor mode or user mode when it crashed, nor if it was in supervisor mode could I tell what the program counter was set to.

Looking at the user space program counter suggested that the user space program always stopped at a compressed instruction. Had I looked closer, I might have noticed that it wasn’t always stopping at the same compressed instruction and realized the bug.

I wrote this evidence off as not clear enough to use.

Ever been in this situation?

Now let me ask, how would you approach debugging this issue?

Examining the tools

Let’s take a quick look at the tools we have available to work with to find this bug.

-

Often the first method I turn to when I have a bug like this is “Voodoo computing.”

Defn: Voodoo Computing

To change what isn’t broken, in an effort to fix what is

In good voodoo programming fashion, I disabled the data cache.

It didn’t help.

I tried playing with the network control port.

It didn’t help either.

In many ways, most of the on-line forum requests I find, on either Digilent’s or Xilinx’s site, are Voodoo computing requests. “I don’t know what’s going on, so I changed something I didn’t understand, and now my design still doesn’t work.”

Sure, “Voodoo computing” feels good, but it rarely leads you any closer to understanding what is going on within the design. On the other hand, your likelihood of success with Voodoo computing is still better than your likelihood of winning the lottery.

-

The other end of the spectrum of bug-fixing approaches is to guarantee that you never have the bug in the first place. That’s the purpose of both formal verification and simulation.

My CPU passed both formal verification and simulation, but was still failing in this instance.

Now what? I went back and double checked the proof of the CPU and the network control interface. They still passed.

While you might be able to simulate a commercial CPU, it is unlikely that you’ll ever be able to get access to the code or formal properties necessary to formally verify one.

-

As I mentioned above, the ZipCPU debugger fails

Normally, I’d try to see what’s going on within the debugger. However, a failing debugger doesn’t help much.

Most commercial CPU’s have a debugger you can use. Sometimes it helps. Often, as in this case, it doesn’t since few debuggers can examine a program struggling with interrupt and non-interrupt contexts.

-

My simple_ping program contains a variety of global variables.

unsigned pkts_received = 0, replies_received=0, arp_requests_received=0,

arp_pkt_count =0, arp_pkt_invalid =0,

arp_missed_ip = 0, arp_non_broadcast = 0,

ip_pkts_received = 0, ip_pkts_invalid = 0,

icmp_echo_requests=0, icmp_invalid= 0,

ping_reply_address_not_found = 0, ping_replies_sent = 0,

ping_reply_err = 0, user_tx_packets = 0,

user_heartbeats = 0;I do this on purpose. Each of these particular variables is a counter of some type. I can then use them to see if or when things are happening with the CPU or not.

By compiling my simple_ping

program with the linker flag, -Wl,-Map=simple_ping.map, the linker will

then create a file for me containing the final addresses of all these

global variables. Indeed,

this is the very reason why they are

global and not

local

variables: so that they will have a fixed address in memory.

If you scroll through this map file, you’ll find lines looking like,

.bss 0x000000000700c490 0x68 obj-zip/simple_ping.o

0x000000000700c490 gbl_picv

0x000000000700c494 subbeats

0x000000000700c498 heartbeats

0x000000000700c49c pkt_id

0x000000000700c4a0 router_mac_addr

0x000000000700c4a8 ping_rx_count

0x000000000700c4ac ping_tx_count

0x000000000700c4b0 ping_mac_addr

0x000000000700c4b8 user_heartbeats

0x000000000700c4bc user_tx_packets

0x000000000700c4c0 ping_reply_err

0x000000000700c4c4 ping_replies_sentThese lines are created as the linker places the simple_ping object file into my executable. At that time, these variables can be found within the BSS segment. Once these values are placed within the final executable, their addresses are then given on the left hand side, with the variables names on the right.

Originally, I would read values from this list by looking up their addresses

and then reading them with my wbregs

command.

This command implements a basic peek/poke

capability

within the design. The result might look something like:

% ./wbregs 0x0700c498

0700c498 ( (null)) : [....] 00000000This would tell me that the CPU

had never gotten to the heartbeats++ statement, and so I could

track down what was taking place across interrupts even–without

needing to disturb the running CPU.

In this case, I knew the CPU was failing. These global variables gave me a good idea of where it was failing. I just had no idea why it was failing.

This approach would work well with any CPU, commercial or otherwise, as long as your design has some form of debugging bus within it.

-

BREAK statements

The ZipCPU supports a break instruction,

BREAK. ThisBREAKinstruction takes an optional immediate value, although it doesn’t do anything different between one immediate value and any other. When the CPU encounters aBREAKinstruction in supervisor mode, all processing halts and waits for the debugger to rescue it. If the CPU encounters aBREAKinstruction in user mode, you can choose to have the statement halt the CPU (the default), or just to return to supervisor mode.A

BREAKinstruction can be added to any program by just calling thezip_break()function from your C/C++ source code. This function call is treated specially by the ZipCPU GCC back end, which then places aBREAKinstruction at that point in the instruction stream.Fig 4. Steps to Executing a Break-point My intent has always been to integrate this statement into the debugger, so that the debugger can create break points to help debug a program. The debugger could then replace any user instruction with a

BREAKinstruction to create a breakpoint. In order to continue, the CPU would then return theBREAKinstruction to its original value and then step forward by one instruction. It could then replace theBREAKinstruction so as to re-enable the breakpoint the next time it is seen. This is still on my to do list, however.Even without break-point support in the debugger, break points have been one of my “go-to” approaches to debugging. If you know the CPU hangs, you can often bisect your code with

BREAKstatements to slowly figure out where the CPU hangs. If it hits the breakpoint, then the hang must’ve occurred after that instruction.Because the break points are not (yet) supported by the debugger, though, you currently need to remove the

BREAKinstruction by recompiling your program and reloading it. It’s not pretty, but it works.In this case, the

BREAKinstruction stopped the CPU long before the problem. So, it didn’t help (much).Many commercial CPUs have break point support as well. This support is usually integrated into the debuggers, and unavailable from the compiler. However, debuggers in general tend to struggle to handle designs with interrupt contexts.

-

LEDs

We’ve discussed using LEDs for debugging before. The CPU can set the LEDs to any particular value of interest, and you can then use the value of the LED register once the CPU hangs to figure out where the CPU hung, or in what kind of state it was in. This is much like the global variables approach listed earlier, save that LEDs can be used without the debugging bus.

In one example, I set an LED on entering an interrupt routine and cleared it on leaving the routine. Seeing a dimly lit LED was then my indication the design was still running. In that example, when/if the design halted, I could clearly see what state the CPU was in at the time.

For this particular design, the LEDs got me closer. Indeed, I was able to bisect the code down to the loop where it failed. It just wasn’t close enough for me to figure out where the bug was coming from.

This approach would also work nicely when debugging with any commercial CPU

-

I’ve often been successful debugging a design by using a watchdog timer. My watchdog timer is essentially identical to the ZipTimer we’ve discussed earlier, with one critical exception: when the timer goes off, the CPU is reset. You can then create a special bootloader to learn something about what happened.

The trick to using the watchdog timer is to sprinkle your code with commands to set the count-down timer to some number greater than zero. Once the timer reaches zero, the CPU resets. In my case, this meant that the CPU would halt, since I had it configured to halt on reset.

This approach may have been most useful to me when debugging my S6SoC design, where I couldn’t fit the debugging bus into the design. Once the watchdog timer would go off, the CPU would read out to the serial port the values from the internal Wishbone Scope. The routine to do this was carefully hand-crafted in assembly, so that I could be sure nothing would over-write any critical portions of the previous state.

I liked that debugging approach so much, that nearly every CPU design I’ve put together since has had a Wishbone Scope connection within it.

In this case, the watchdog timer felt useful but didn’t provide me with any of the key information I needed to debug the problem.

I needed more.

|

-

This brings me back to my Wishbone Scope.

As you may remember, the Wishbone Scope is a bus-based scope. It records any data you send it, and then stops some time following a trigger after which you’ll need to read the data out via the bus. It is not a JTAG scope, such as Xilinx’s ILA, but rather a scope that acts more like an integrated peripheral to the design. This means that the CPU can configure it as part of any program it might be running.

The Wishbone Scope has three big limitations.

-

First, since it is a bus-based scope, your design needs a working and dependable peripheral bus within it in order for it to work.

While the Wishbone scope. was built around the Wishbone bus specification, I have other versions for both Avalon and AXI-lite busses.

-

Second, because it is a bus-based scope, it can only capture the width of the data bus on every clock period. Since my busses are all 32-bits wide, this scope will only ever capture 32-bit debugging words at any given time.

Yes, I keep telling myself that it would be easy to strap two scopes together, but the reality is that I have never done the software work necessary to make that possible.

But what to capture? I’d like to capture every time a register is written, both the name of the register (5-bits) and the value written to it (32-bits). This busts my 32-bit limit, and I’m not even done with my requirements yet. I’d also like to capture bus requests. In the case of a bus read, I’d like to capture the read address (32-bits) and the returned value (32-bits, but on a later clock). In the case of a write, I’d want to capture both the address (32-bits) and data written to the bus (another 32-bits). I’d also like to be able to “see” how long it takes the bus to return a value (i.e. CYC, STB, WE, STALL, and ACK, or about 5 bits). What else? I’d definitely like to capture any branch targets (32-bits), as well as all of the internal pipeline control flags within the the CPU.

It should be pretty obvious that all of this information won’t fit within a single 32-bit word.

My current solution to this problem is to multiplex several words together depending upon the conditions.

a. If the CPU is halted, set the 32’bits to be the pipeline control signals.

b. If a register is written, record which register within the set, and 26-bits of that registers value

Fig. 6: Looking back into the past Fig 6. above shows the format of this word. It starts with a

Tbit, to indicate if this was the word that triggered the scope. The0following identifies this encoding. It’s then followed by 4-bits identifying the register, and then 26-bits of the registers value.c. Anytime the pipeline is cleared, such as if we are jumping to a new address, record the bottom 28 bits of the address. (Ignore the subword address bits, though, since the ZipCPU can only jump to addresses on word boundaries.)

Fig. 7: On any jump, record the jump address d. Otherwise, if there is a memory operation taking place this cycle, return 27-bits of the data being written (if it is a write cycle), otherwise return 27-bits of the address.

Fig. 8: On any memory operation, record either the address or the value e. Finally, if all else fails, just record the internal CPU pipeline control signals.

These get kind of cluttered, so I’m not going to try to draw them here. Feel free to check out how

dbg_flagsare set within the ZipCPU if you are interested.That’s four different possibilities, all nearly 32-bits in size. The first couple bits of each of these possibilities, stored in the MSBs, are used to decode which of these possibilities was recorded.

One sad reality, though, is that my VCD writing script can’t handle non-constant bit-mappings. In other words, when I need to examine the captured data, I’ll end up looking at an ad-hoc textual (printf) output describing what’s going on.

It works, but it isn’t pretty.

-

The third limitation with any internal logic analyzer, whether it be a Wishbone Scope or something else, is that the size of the memory buffer has to compete with any other block RAM requirements within the design.

In other words, even though the Wishbone Scope can record values from within any design at the speed of the CPU’s clock, it cannot do so for very long. The only way to capture relevant data therefore is to use some kind of trigger signal in order to tell us when to stop recording, as I illustrated in Fig. 5 above.

The other unfortunate consequence of adding a scope into a design is that you might need to adjust how much block RAM the CPU has available to it. Using AutoFPGA, this is the easy part. One value controls the size of the block RAM, and re-running AutoFPGA will adjust the address space, adjust the pointer of where the block RAM is located that’s used by the bootloader, and build us a new linker script to be used when we’re using this smaller amount of block RAM. Then, later, when we remove the scope and change the block RAM usage number back, we’ll return to our prior/original configuration.

As with adjusting the block RAM size, the scope size can also be adjusted by just changing a single number in the scope configuration.

Even better, the scope can be added (or removed) but just adding (or removing) the scope’s configuration filename to/from the AutoFPGA command line. All of the files then depending upon the scope can be rebuilt, and pre-processor macros will be set so they can be built knowing whether or not the scope is built into the design or not.

-

In the end, it was the wishbone scope, together with formal methods, that finally helped me find the bug.

To do this, I had to modify the trigger for the scope. That’s the next part of this story.

Normally, I have the CPU’s scope trigger off of any internal exception that would cause the CPU to halt unnaturally.

initial debug_trigger = 1'b0;

always @(posedge i_clk)

debug_trigger <= (!i_halt)&&(o_break);This wouldn’t work in this case, because the whole problem was that the CPU hung without properly halting.

So I adjusted my trigger logic. I chose to count the number of clocks since the last valid instruction retired.

Within the ZipCPU, any time a

non-memory instruction retires, it sets the alu_pc_valid flag. This tells

the CPU

that the program counter

needs to be updated. If alu_pc_valid isn’t true for many cycles, then

we can conclude that the CPU

must be hanging.

// Look for a hang of 2048 cycles

reg [10:0] dbg_trigger_counter;

always @(posedge i_clk)

if (alu_pc_valid)

dbg_trigger_counter <= 0;

else

dbg_trigger_counter <= dbg_trigger_counter + 1'b1;

initial debug_trigger = 1'b0;

always @(posedge i_clk)

debug_trigger <= ((!i_halt)&&(o_break))

||(&dbg_trigger_counter);That’s the idea.

The problem is that I only want to know when the CPU hangs. What if it hasn’t been started in the first place? What if I stop it in the debugger? What if the CPU is in sleep mode waiting for an interrupt?

I needed to adjust the counter just slightly to avoid these cases.

always @(posedge i_clk)

if (alu_pc_valid)

dbg_trigger_counter <= 0;

else if ((!i_halt)&&(!sleep))

dbg_trigger_counter <= dbg_trigger_counter + 1'b1;Now the scope will trigger after 2048 clocks of the CPU not updating the program counter.

But would this trigger on the bug?

The Trace Result

Yes, the scope triggered! The next time I ran the simple_ping program on the CPU it hung as I hoped and expected. As a result, I could check the trace capability and see that, yes, the scope had triggered and there was a trace waiting for me. (Yes, I’m skipping over writing the software to read from the scope and to decode its results–we’ve already discussed that before.)

Since we are working with text, and not VCD files, every line shows one more clock step of what’s going on. The lines will start with the location within the scope’s buffer, followed by the 32’bit word located there. After that, the line follows a custom ad-hoc decoding script.

6059 04012512: R1 <- 0x.0012512

6060 0c7b3cd9: R3 <- 0x.07b3cd9

6061 0c7b3cda: R3 <- 0x.07b3cdaWe’ll pick it up at line 6059 out of 8191. While it can be annoying to page through thousands of lines of output just looking for the bug, in this case, the bug was at the bottom of the trace. Further, once the CPU halted, all the trace words were identical–making it easy to quickly scan about two pages of information from the bottom of the trace to find the bug.

At line 6059, the trace looks fairly normal.

The CPU

simply writes to registers R1, then R3, and then R3 again.

Then there is a cycle where nothing is written to the registers. This is

likely the result of a CMP (compare) instruction. Such an instruction

consumes one clock, but doesn’t write to any register values.

6062 5885b440: FLAGS 5885b440 CE[DOA ] V[PDO ] MCE |

This FLAGS response contains a large bit-field. To help understand it,

I’ve shown a model of the

ZipCPU pipeline in

Fig. 9 on the right.

I’ve tried to break out some of the trace fields in the line above.

For example, the V[*] section tells me which pipeline stages have valid

entries within them: the prefetch, decode, and read-operands

stage. It also tells

me that the master CE (MCE) signal, the signal that controls whether any

instruction transitions from the read operands stage to the execution units,

is high. Given that we have valid instructions, and MCE is high, the

CPU is running at this trace point.

Finally, the trace line shows me which pipeline stages are advancing. These

are the ones with their respective *_ce flags

high.

As we discussed before, the basic pipeline operation follows as,

always @(posedge i_clk)

if (clear_pipeline)

stage_valid <= 1'b0;

else if (stage_ce)

stage_valid <= prior_stage_valid;

else if (next_stage_ce)

stage_valid <= 1'b0;

always @(posedge i_clk)

if (stage_ce)

begin

// Copy data from the previous pipeline

// stage to this stage, operating on it as

// necessary

endIf the stage’s CE line is high, the instruction moves from the previous

stage into the current stage stage.

In this case, instructions will be advancing from the Prefetch to the

Decode stage, from the Decode stage to the read Operands stage, and

from the read Operands stage to the ALU stage. That’s the meaning of

the cryptic CE[DOA ] field. This is all good: the

pipeline is full,

and the CPU

is operating normally–it just so happens that it is not writing to any

register values on this cycle. Again, this is normal.

Following the compare (CMP) instruction, there’s a

branch instruction.

This shows up as taking two clocks. The first one is where the

program counter

is given a new value. This is the same register write format we discussed

above in Fig. 6. The second line shows where

the CPU actually sets the

program counter and

clears the pipeline.

This is the same format we showed in Fig. 7 above.

6063 3e801814: PC <- 0x.2801814

6064 41a00605: JMP 0x06801814At this point, we can finally identify where in the program code we are at. By examining the disassembly of our simple_ping program, we find that we are in a tight loop waiting for a network packet to be received, or for the seconds-hand on the real-time clock to advance.

while(((_netp->n_rxcmd & ENET_RXAVAIL)==0)

&&(_rtc->r_clock == rtc))

user_heartbeats++;Here you can see where I set one of those global

variables, user_heartbeats.

This allowed me to tell, when using the

global variable

debugging approach, when the program was hung, since this value would stop

changing.

Following the conditional

branch,

the CPU needed to clear its

pipeline. This

required a couple of clock cycles, which are shown in the trace lines below.

As before, the CE[] block shows which pipeline stages, Decode, Operands,

or ALU are accepting data, whereas the Valid block shows which stages

actually have valid instructions within them. Hence, we are now watching

a new instruction stream move into the

pipeline.

The first of these new instructions will eventually write to the R2 register,

as shown below.

6065 58810000: FLAGS 58810000 CE[D ] V[ ] MCE

6066 58850000: FLAGS 58850000 CE[D ] V[P ] MCE

6067 5885a000: FLAGS 5885a000 CE[DO ] V[PD ] MCE

6068 5885b400: FLAGS 5885b400 CE[DOA ] V[PDO ] MCE

6069 087b3cda: R2 <- 0x.07b3cdaThe next instruction is a

load word instruction.

This instruction issues a

bus

transaction to read from location 0x03800000 in memory.

6070 70e00000: MEM-R[0x.3800000] -> (Not Givn) (GBL)While the scope hasn’t captured all 32-bits of that address, I can infer it from the fact that my design doesn’t have more than 27-bits of address space, and so the number of bits is sufficient.

This read polls the network interface, to query if any packets have been read.

The (GBL) flag indicates that this read is from the global address space,

rather than the processor’s local peripheral address space.

As you might imagine, reading a value from the bus stalls the processor. While there are waiting instructions in the prefetch, decode, and read operands stages, nothing moves forward until this result comes back–lest the CPU need to undo and re-perform any instructions. (The ZipCPU is not an “out-of-order” machine.)

6071 5884d00c: FLAGS 5884d00c CE[ ] V[PDO ] MCE DCD-STALL MEM-BUSYWhile it is waiting, we get another flags trace line. In this case, we can see

that the memory unit is busy, MEM-BUSY. DCD-STALL is an indication that

there is an instruction in the decode stage of our

pipeline

that is stalled. We’ll come back to this later.

The memory instruction takes about five clock cycles. During this

time, nothing changes within the CPU,

and so the wishbone

scope

control program

outputs the line **** **** so we can tell that nothing is changing.

Once the memory read operation is complete, the result is written to R1.

**** ****

6076 04020000: R1 <- 0x.0020000So far this all looks good. We haven’t hit the bug yet.

The CPU then issues an AND instruction, to check whether or not the network interface has received any packets. The result of this instruction is zero: there are no pending packets waiting.

6077 04000000: R1 <- 0x.0000000Based upon the zero flag, we then hit a conditional branch, and so we need to clear and load the pipeline again.

6078 3e80182c: PC <- 0x.280182c

6079 41a0060b: JMP 0x0680182c

6080 58810000: FLAGS 58810000 CE[D ] V[ ] MCE

6081 58850000: FLAGS 58850000 CE[D ] V[P ] MCE

6082 5885a000: FLAGS 5885a000 CE[DO ] V[PD ] MCE

6083 5885b020: FLAGS 5885b020 CE[DO M] V[PDO ] MCEThis time, we’re going to read from address 0x05800060: the

real-time clock register.

6084 71600018: MEM-R[0x.5800060] -> (Not Givn) (GBL)

6085 5884d00c: FLAGS 5884d00c CE[ ] V[PDO ] MCE DCD-STALL MEM-BUSY

**** ****

6090 04012512: R1 <- 0x.0012512The result, 0x012512, from the clock

is in Binary Coded Decimal, or

BCD. You might

read it as 1:25:12. It indicates that it has now been one hour, twenty five

minutes, and twelve seconds since this design was initially loaded. (No,

I never set the clock to real time–I was more focused on debugging all

this time.)

Again, so far this looks normal.

Then something different happens. The trace ends.

6091 5884d000: FLAGS 5884d000 CE[ ] V[PDO ] MCE DCD-STALL

**** ****

8127 d884d000: TRIG FLAGS d884d000 CE[ ] V[PDO ] MCE DCD-STALL <--- TRIGGER

8128 5884d000: FLAGS 5884d000 CE[ ] V[PDO ] MCE DCD-STALL

**** ****

8191 5884d000: FLAGS 5884d000 CE[ ] V[PDO ] MCE DCD-STALLNotice the trace location values: 6091, 8127, 8128, and then 8191. These are

key. 6091 is expected–that’s the next clock cycle. 8127 is unexpected.

That means that we just spent 2036 cycles doing nothing.

Wait, I thought we set the CPU to count 2048 cycles before setting the trigger on our scope? How did the count end up at 2036?

It ended up at 2036 because we only counted ALU results, not results from the memory unit. Our last valid ALU result was on cycle 6078, we were still good here.

Or rather, we’re not good here: the CPU just stopped.

The good news is that we know what instruction we were executing. If you run

objdump on our executable, you can see an assembly listing showing the

instruction we just completed, and the context around it. To generate a

listing, just type,

% zip-objdump -S -D simple_ping > simple_ping.txtIndeed, I find listings of this type so valuable when debugging, that I have a special make target just to create listings. The target looks like:

%.txt: %

$(OBJDUMP) -S -D $^ > $@This will get turned into the command above any time I type

% make simple_ping.txtMake is smart enough to recognize, when I

type this, that if the source code for simple_ping has changed then it needs

to be rebuilt before dumping the assembly to simple_ping.txt.

Sadly, I’ve recompiled this routine since capturing my trace. As a result, the assembly below that matches the loop in question doesn’t quite match the trace. Still, this was roughly the instruction we halted on.

7001808: 84 a8 97 88 LW (R5),R0 | MOV R1,R2The critical piece of this disassembly is that we were trying to execute a

two-instruction word, containing both a load word

instruction (LW)

followed by a register copy

(MOV) instruction.

(ZipCPU

assembly

reads left to right, hence the MOV instruction copies R1 into R2.)

|

When I introduced the ZipCPU

ISA,

I didn’t say much about compressed instructions. Now, however, you need

to know a little bit about them to know what’s going on. Fig. 10 at the right

shows my quick reference sheet of

ZipCPU

instructions. The first line shows the basic format of most instructions.

The second line shows the format of a compressed instruction. It starts with

a 1 in the MSB, followed by fifteen bits containing the first instruction,

an unused bit, then fifteen bits containing the second instruction. The

opcodes for these compressed instructions are shown in the top half of the

third column: SUB, AND, ADD, CMP, LW, SW, LDI, and MOV.

ZipCPU compressed instructions are a little different from other architectures, because you cannot jump halfway into a word. All instruction words, whether compressed or not, are all word aligned. The assembler handles the compression, making certain for example that branch targets never get compressed into the middle of a compressed instruction word.

Further, the

ZipCPU disassembler displays both

instructions within a compressed instruction word on the same line, using

a | to separate them–as shown above.

This will all come into play in a moment.

From the trace we know that we executed the LW instruction, but that the

MOV instruction never made it into the execution unit(s).

Why not? What happened?

Moving from trace to bug

When I finally got to this point, I had no idea why the CPU might stall like that.

Yes, it is my CPU. Yes, I did write the code for it. Yes, the stall logic is all my own handiwork. Yes, I did just create this trace. However, when a design gets over 2500 lines, when you wrote it years ago, when it has multiple options it can be built with … it can become difficult to remember all the details. Worse, even if you do remember them, do you know why this happened?

So I turned to formal methods.

Instead of proving that the CPU “worked”, I wanted to prove that the CPU would never stall like this. Hence, I wanted to say that: if there’s an instruction in the read-operands stage, the CPU should always proceed to the execution stage.

So how might we write such an assertion?

Well, if there’s a valid instruction in the read-operands stage, then it should proceed to the execution stage, right?

always @(*)

if (op_valid)

assert(adf_ce_unconditional | mem_ce);Here adf_ce_unconditional says the instruction will move forward into the

ALU, DIVide or (not-yet-written) FPU (floating point unit), whereas

mem_ce says the instruction will move into the memory unit.

Only, that’s not quite right. We don’t want to move forward if the

debugging unit

has requested that we halt. In that case, master_ce will be low.

We also don’t want to move forward on a reset, or anytime the

pipeline

is cleared. Further, we don’t want to move to the execution stage

if any of the execution units is busy and its result might affect one

of our operands.

So, let’s adjust our assertion.

always @(*)

if ((op_valid)&&(master_ce)&&(!clear_pipeline)&&(!i_reset)

&&(!div_busy)&&(!div_valid)

&&(!mem_busy)&&(!mem_valid)&&(!bus_err)

&&(!alu_busy)&&(!alu_pc_valid)

&&(!fpu_busy)&&(!fpu_valid)&&(!fpu_error))

assert(adf_ce_unconditional | mem_ce);This assertion fails.

It has? Aha! Have I found my bug?

I pulled up the trace to look. This time, it’s a VCD file created by SymbiYosys. No, this assertion hasn’t found the bug. Instead, all I found were examples of other reasons why the instruction shouldn’t move forward.

The execution units shouldn’t accept any more instructions if …

-

If this is a

BREAKinstruction,op_break, the pipeline needs to stop just before the instruction gets to the execution unit. -

If the CPU has halted on an error,

o_breakwill be true and we’ll need to make sure we are halted. -

If we just retired an illegal instruction, we need to halt. Similarly, if the last memory instruction returned a bus error then we need to halt as well.

-

If there’s an interrupt pending or a trap was just executed,

w_switch_to_interruptwill be true and we’ll need to switch to supervisor mode. In this case, the instruction in the read operands stage won’t move forward into any of the execution units either.

The list goes on. In the end, I was surprised at how long it was.

always @(*)

if ((op_valid)&&(master_ce)&&(!clear_pipeline)&&(!i_reset)

&&(!div_busy)&&(!div_valid)

&&(!mem_busy)&&(!mem_valid)&&(!bus_err)

&&(!alu_busy)&&(!alu_pc_valid)

&&(!fpu_busy)&&(!fpu_valid)&&(!fpu_error)

&&(!op_break)&&(!o_break)

&&(!w_switch_to_interrupt)

&&(!alu_illegal)

&&(!ibus_err_flag)&&(!ill_err_i)&&(!idiv_err_flag))

assert(adf_ce_unconditional | mem_ce);At this point, however, SymbiYosys had found my bug for me.

The problem was specific to the compressed instruction word. In the ZipCPU ISA, two instruction words may optionally be packed into one, and the ZipCPU has no ability to restart any instructions mid-word (currently). I may need to come back and “fix” this later, but the approach was chosen to simplify the instruction decoder: all instruction words start on word boundaries, some instruction words contain two instructions.

This was why the debugger failed: it had to wait for the second part of the compressed instruction word to complete before the CPU would halt, since there is (currently) no way to restart the CPU mid-instruction word.

This also means that I cannot jump into an interrupt mid-word.

The internal flag, w_switch_to_interrupt properly captures this logic.

The stall signal, however, did not. That meant that any time the interrupt

signal went high in the middle of a two-instruction word, the interrupt logic

would wait for the second instruction to complete while the stall logic would

prevent the second instruction from starting.

The conflicting logic lead to the deadlock causing the CPU to hang.

The fix was simple: add a check to the stall logic so that we only stall if an interrupt is pending and we are on a single-instruction word or the second instruction of a double-instruction word.

Once fixed, my network ping program worked as intended again.

Wasn’t the CPU Formally Verified?

Some time ago, I wrote about all of the bugs I found when formally verifying this CPU. I even went to ORCONF 2018 to discuss all of the lessons I learned while going through this process.

Shouldn’t formal verification have caught this bug?

Yes, I suppose it should have.

Why didn’t it?

Because I never created a property asserting that the CPU would never stall indefinitely.

I’ve now gone back to the proof and added a similar property to ensure that the CPU will never stall in the decode stage either.

This wasn’t the first time I found a bug in my now formally verified CPU. The first time was when I tried setting the program counter from the debugging port. You may recall, I mentioned fixing this bug above. This oversight happened for the same reason: My initial set of formal properties was incomplete.

The CPU now has a set of debugging interface properties, “proving” that the debugger can set and adjust the CPU’s state.

What about simulation?

What about simulation? Could this bug have been caught during simulation? Why wasn’t it?

I suppose this bug could have been caught in simulation.

However, my current network packet simulator implements a loop-back mode only. This isn’t sufficient for testing the complex interaction of ARP requests, ARP replies, and ping requests and replies.

An online friend has recommended I try building a network simulator that integrates into the Linux Kernel, so that other computers can actually interact with my design while it is running in the simulator. This would be a truly awesome idea, and might well have caught this bug. I might just need to update my network simulation for this purpose.

Conclusions

So what are some of the lessons we can learn from this whole exercise?

First, your current bug may not be in the last place you looked. I had just worked on the flash controller. Mentally, my mind was in a bug hunting mode within the flash controller. Instead, the bug was somewhere else within my design–somewhere where I least expected it.

Second, the more things you change within a design, the more painful it is to find and fix the bugs within it. In many ways, I set myself up for problems with the shear number of things I had changed to get here. Understand, just because I presented one or two of these problems above, doesn’t mean that these were the only problems I had.

Third, did you notice how many tools I was using to debug the design? Besides the tools for debugging the CPU listed above, I was also using Wireshark for debugging the network as well.

Finally, debugging a CPU really crosses several disciplines. While this is a “hardware” task, look at what we needed to do in terms of support software along the way: We needed a debugging port to the CPU (S/W+H/W), a scope to gather a trace from within the CPU (H/W), software to interpret that trace (S/W), a disassembly of the failing program (compiler S/W), an understanding of the ZipCPU instruction set (assembly S/W), and finally formal methods to finally catch the bug in the end.

That’s quite the cross-disciplinary task!

This also means that, if you are interested in learning hardware design, there are several prerequisites before you dive into CPU design. The other way of looking at it is that CPU design is a wonderful field of study where you will be forced to learn all kinds of details of how CPUs work that you would never learn otherwise.

And he informed me, and talked with me, and said, O Daniel, I am now come forth to give thee skill and understanding.