Formally Verifying a General Purpose Ultra-Micro Controller

At long last, I have formally verified a DDR3 SDRAM controller. Sadly, I haven’t yet had the time to verify its operation on real hardware. Worse, the I/O isn’t quite there yet so I don’t expect it will work … yet.

For those who don’t know the story, I built a DDR3 SDRAM controller years ago. These were back in my stubborn days, before I was ever willing to use any vendor modules. I then built a Wishbone controlled DDR3 SDRAM controller, and attempted to instantiate it on my Arty board.

Let’s just cut the story short: it wasn’t a successful project.

There’s a world of difference between the logic used to drive the DDR3 SDRAM and the actual wires and protocol required by the DDR3 SDRAM. That world of difference lies in the byte-strobe signals, but that’s a story for another day.

|

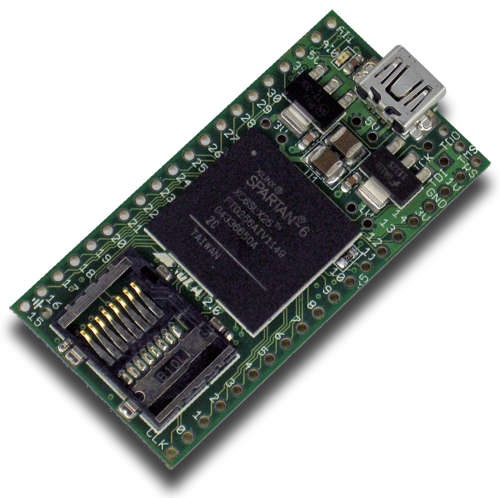

Prior to this DDR3 SDRAM controller, I had built an SDRAM controller for my XuLA2 LX25 board. It wasn’t all that had to do, and so I thought I would try my hand at a DDR3 SDRAM controller. Since this was a second generation Dynamic RAM (DRAM) controller for me, I came into this project knowing a thing or two regarding what had worked before and what hadn’t. (Or at least, so I thought.) I also came into the project expecting to run at a 200MHz system clock. Then it was a 100MHz clock, then an 80MHz clock, then … my motivation fell apart. This was to be expected in so many ways–especially since the project was never funded in the first place and it cost me several months of work.

Recently, however, I’ve had the opportunity to dig up the design again. Actually, I’ve had the opportunity to dig into both the SDRAM design and the DDR3 SDRAM design. I modified the SDRAM controller to handle ISSI’s SDRAM chip, as found on the new IceCore board from myStorm as well as started working to port my controller to the DDR3 SDRAM chip found on Lattice’s ECP5 Versa Board. That is, until my project funding ran out again.

One of the big things that has changed between my early work with DRAM controllers and now is that I’ve discovered formal verification. Neither of my original cores were formally verified initially. Indeed, one of my early formal verification stories is of trying to verify the (non-DDR) SDRAM controller and discovering a corner case where the controller might’ve accessed the wrong piece of memory.

That would’ve been hard to debug.

Today’s discussion, however, focuses on a piece of logic found within each controller–something I’m going to call an ultra-micro controller.

The Purpose of the MicroController

There are actually two ultra-micro controller’s found within each of the updated DRAM controller designs. One of them is responsible for the reset logic sequencing, and the other for the refresh logic. But I’m getting ahead of myself. Let’s start with the basic question, what is a “MicroController”?

Wikipedia currently defines a MicroController as

a small computer on a single integrated circuit on a single metal-oxide semiconductor (MOS) integrated circuit chip. In modern terminology, it is similar to, but less sophisticated than, a system on a chip (SoC); an SoC may include a microcontroller as one of its components. A microcontroller contains one or more CPUs (processor cores) along with memory and programmable input/output peripherals. … (Yes, Wikipedia’s definition even changed while I was writing this!)

This is way more sophisticated than what I am talking about today. That’s why I’m going to use the term “ultra-micro controller” today, I’m not talking about anything that would rank as I high as a full-blown CPU or even anything with external memory. Instead, I’m referring to something a little more sophisticated than a finite state machine, but not nearly as sophisticated as a full CPU. The ultra-micro controller I’m talking about today does nothing but follow a simple fixed script, setting a single register along the way. This script is the unique feature that separates it from a more traditional finite state machine.

I’m going to use this in two contexts initially, and probably many others later. These are the reset sequence and the refresh sequence of my DRAM controllers. Here’s the basic idea, as applied to the reset sequence: when you read through the data sheet for a memory chip, you’ll discover that there’s a very specific sequence of events that needs to take place from power up to the first memory access. This sequence includes timing relative signals given to the core. For example, many SDRAM chips require a PRECHARGE-ALL common (initializes all of the internal memory banks to idle), followed by a REFRESH command and then finally a SETMODE command to set the settings within the chip, telling the chip how the controller will interact with it. Only after all three of these commands have been issued is the memory ready to use.

There’s one other key detail, and that is that those three startup commands, PRECHARGE, REFRESH, and SETMODE, all have particular timing relationships between them. For example, there may need to be an adjustable number of idle cycles between the PRECHARGE command and the following REFRESH command and so forth.

That’s one of the two ultra-micro controllers I needed for these two DRAM controllers.

The other one I’m using to handle the refresh logic. If you aren’t familiar with DRAM, then you need to understand first that it’s built out of capacitors. If the capacitor is charged, that particular bit of memory is a one else its a zero. Building memory out of capacitors makes it possible to build memories with very low area and low power. The problem with using capacitors to hold memory is that the charge within any capacitor will decay over time. It leaks. If each capacitor is not re-charged periodically, they will return to zeros, losing any data you might have stored within them. To keep this from happening, the controller needs to follow an internal schedule of commands within it–periodically pulling the DRAM off line, idling all the RAM banks, issuing a REFRESH command, and then putting them back on line.

This is all well and good, but my problem was associated with the giant process it took to calculate the memory commands.

To see how bad it got, here’s a look at what my initial draft looked like:

always @(posedge i_clk)

if (in_reset_sequence)

begin

// The reset state machine

//

if (reset_condition_one)

o_cmd <= SETMODE;

else if (reset_condition_two)

o_cmd <= PRECHARGE_ALL;

else if (reset_condition_three)

o_cmd <= REFRESH;

else

o_cmd <= NOOP;

end else begin

// ...

if (need_refresh)

begin

if (first_condition)

// Wait since the last precharge

o_cmd <= NOOP;

else if (second_condition)

// Precharge all banks

o_cmd <= PRECHARGE;

else if (third_condition)

// Wait for all banks to become idle

o_cmd <= NOOP; // Wait

else if (fourth_condition)

// Issue the refresh command

o_cmd <= REFRESH; //

// ...

else begin

// Another large state machine

// To handle read and write commands

end

endThe problem with this giant always block was that the state machine it generated was so complex it struggled to pass timing at any decent clock speed. Placing three separate state machines into this same logic block, one for reset, one for refresh, and one for reading and writing the RAM was … just too much for the hardware to handle in the time allotted to it.

I needed to simplify my design. I needed to pipeline some of this logic. (Okay, switching to a proper FSM state variable would’ve helped some as well.)

Eventually, I decided that I should separate this maintenance logic, that’s the word I’m using for both reset and refresh logic, from the rest of this giant logic block. Along the way I noticed that all of the maintenance logic was independent of any actual bus requests. I could run it a clock ahead of when it actually needed to be used. In other words, I could pre-calculate maintenance commands before they would be used and so simplify things. For example, I could have a reset logic block,

// The reset state machine

always @(posedge i_clk)

if (reset_condition_one)

reset_cmd <= SETMODE;

else if (reset_condition_two)

reset_cmd <= PRECHARGE_ALL;

else if (reset_condition_three)

reset_cmd <= REFRESH;

else

reset_cmd <= NOOP;together with a separate and independent block to calculate the refresh command sequence,

always @(posedge i_clk)

if (first_refresh_condition)

// Wait since the last precharge

refresh_cmd <= NOOP;

else if (second_refresh_condition)

// Precharge all banks

refresh_cmd <= PRECHARGE;

else if (third_refresh_condition)

// Wait for all banks to become idle

refresh_cmd <= NOOP; // Wait

else if (fourth_refresh_condition)

// Issue the refresh command

refresh_cmd <= REFRESH; //I could then combine these two logic sequences together,

always @(posedge i_clk)

if (reset_logic)

maintenance_cmd <= reset_cmd;

else

maintenance_cmd <= refresh_cmdWhy would I do all of this? To simplify the giant always block, and to

maintain a high clock

speed. Now,

instead of having multiple separate logic checks within one giant always block,

I can instead just check for the flag

maintenance_mode

which would indicate I wanted to issue either a command from the reset sequence

or the refresh sequence.

always @(posedge i_clk)

if (maintenance_mode)

begin

// Enter here during either reset or

// refresh

o_cmd <= maintenance_cmd;

end else begin

// ...

// The large read/write state machine to read from memory

endThis greatly simplified the logic, but I now needed special state machines for both reset and refresh sequences.

To make this work, I just wanted a simple core that would do nothing but walk through the various reset or refresh commands in the simplest logic possible.

|

Hence the need for this ultra-micro controller.

The microncontroller itself worked off of a very basic 3-stage pipeline, as shown in Fig. 2. First it would calculate an address.

always @(posedge i_clk)

r_addr <= r_addr + 1;It would then read a command from that position in the command sequence.

always @(posedge i_clk)

r_cmd <= command_memory[r_addr];Finally, it would act upon that command, setting internal registers.

always @(posedge i_clk)

begin

in_refresh <= 0;

if (r_cmd[0])

in_refresh <= 1;

refresh_cmd <= r_cmd[4:1];

stall_bus <= r_cmd[5];

// ... and so on

endWell, … not quite. Often the startup or refresh sequence would require delays between particular states. Let’s dig into that more in a moment.

For now, notice two things. First, this approach drastically simplified my processing. Second, getting this core to pass induction requires a little bit of a trick–I’ll share that in a moment.

Indeed, if it weren’t for the neat trick required to pass induction, I wouldn’t be writing this article. The core itself is embarrassingly simple.

Adding a counter

The biggest problem with the logic above is simply that the various commands also have delays and durations associated with them. For example, on ISSI’s SDRAM chip, nine clocks need to separate any REFRESH command from any command following. Our simple logic above would require 8-separate NOOP commands in our sequence to capture that.

|

Alternatively, we could add a second type of instruction–a “wait” instruction, with an integrated number of wait clocks. This would then become an ultra-micro controller with two op-codes: the first for setting output registers, and the second for starting a wait counter. You can see these two codes shown in Fig. 3 on the right.

This would change our logic above slightly. For example, we’d only advance the address pointer to the next instruction if the delay counter had already finished counting down to zero.

always @(posedge i_clk)

if (counter == 0)

r_addr <= r_addr + 1;Similarly, we’d only fetch the next instruction if the delay counter were zero as well.

always @(posedge i_clk)

if (counter == 0) case(r_cmd)

0: r_cmd <= command_zero;

// ....

endcaseWe’d also only update the registers controlled by this sequence if the delay counter were zero, and the next command didn’t request a delay.

always @(posedge i_clk)

if ((counter == 0) && (!r_cmd[OPCODE]))

begin

in_refresh <= 0;

if (r_cmd[0])

in_refresh <= 1;

refresh_cmd <= r_cmd[4:1];

stall_bus <= r_cmd[5];

// ... and so on

endcaseWith all of that out of the way, the only new feature remaining is the counter itself. In this case, on any new delay instruction we set the counter to the value given in the instruction, otherwise if the counter is greater than zero we count down.

always @(posedge i_clk)

if ((counter == 0) && (r_cmd[OPCODE]))

counter <= r_cmd[OPCODE-1:0];

else if (counter > 0)

counter <= counter -1;The counter really is that basic. Indeed, perhaps you’ve noticed that many of my formal verification quizzes started out with verifying the properties of a similar counter.

At least that’s the basic design. We’ll come back to it in a moment and fill in the details.

The Problem with Induction

If you’ve been reading my blog for a while, you may remember how I’ve described the problem associated with induction before. Induction is important enough that we can’t really skip it. Without induction you cannot formally verify anything beyond the reset sequence. Without induction, you cannot prove that the properties in your design never fail.

Well, okay, that’s a debatable presumption. I should point out that there are others who don’t use induction as regularly as I do. For example, Clifford Wolf, the author of the picorv32 RISC-V CPU, Yosys, SymbiYosys, and the riscv-formal set of formal properties for RISC-V CPU verification tends not to use induction as much. Indeed, a strong argument can be made for not using induction: it’s complex, hard to learn, it requires its own way of thinking, and for certain problems a bounded model check can be equivalent.

We can illustrate this with a basic CPU. Suppose we had a CPU with a 9-stage pipeline. Chances are we could fully verify it in 20 clock cycles. I’ll admit, I’m guessing with these numbers, but there is a mathematical way to determine this distance and I think I can argue that the minimum bounded check needs to be longer than a full operation. That said, I often find myself verifying cores with minimum cycle lengths that last longer than 256 cycles, such as these AXI MM2S or S2MM cores. Simply put, induction is an important tool, but it does have its place.

When you use

induction, you

will find the traces shows the appearance of having started the design

somewhere between the initial state and eternity. However, this view of

induction

often frustrates engineers who use it, because they’ll point out that X

must happen before Y gets set, but somehow Y is getting set without

ever passing X. “But I initialized that value!” is a common refrain

that I hear.

The problem is that this is not how induction actually works. Induction works by piecing together some number of logic steps for your core that don’t break any assertions, and then tries to break your assertions on the last step. It only offers the appearance that the state chosen is in the middle of time. In reality, the “initial” values in the induction trace are arbitrary–they could be anything, with the only requirements that they don’t break any assumptions, and again that they don’t break any assertions until the last step.

As a result, because the induction step doesn’t start at the initial time step, constraining every register in the design becomes important. This includes the registers set by our ultra-micro controller.

To bring this point home, I had a couple of memory rules that weren’t passing induction. Things like, REFRESH commands that could only be issued once all the banks had been placed in their idle modes, or that PRECHARGE commands could only take place no earlier than some (configurable) number of clock cycles after ACTIVATE commands, etc.

The particular problem is common among pipelined processes: Unless the various pipeline stages are so constrained, the induction engine might start in a state where the stages have no relationship to each other. The ultra-micro controller might load a command to be decoded, and then the induction engine starts with a command in that register that’s not in the command set. Or it might start outputting a value that nothing in the command set would allow. Or it might do both.

Induction can seem kind of strange in that way.

The way we’ll solve this

induction

problem is by walking a microcode address through the various pipeline stages

of processing. We’ll use one value to describe the address pipeline stage,

f_addr, one for the command stage, f_cmd, and one to describe the currently

active outputs, f_active–the final pipeline stage. These three are all

addresses into our instruction stream.

reg [LGNCMDS-1:0] f_addr, f_cmd, f_active;We’ll set all three of these pipeline-addresses to zero initially and again upon any reset.

initial { f_active, f_cmd, f_addr } = 0;

always @(posedge i_clk)

if (i_reset)

{ f_active, f_cmd, f_addr } <= 0;In all other cases, we’ll increment the address whenever we step the ultra-micro controller forward by one step.

else if (w_step)

begin

f_addr <= f_addr + 1;

{ f_active, f_cmd } <= { f_cmd, f_addr };

endThe next step is to convert these addresses to commands. We can use a large

parameter to store these commands. If we have NCMDS commands, each WORDWID

wide, this might look like,

parameter [NCMDS*WORDWID-1:0] COMMANDS = {

// An arbitrary set of commands

{ 1'b0, 2'b00, 4'h0 },

{ 1'b0, 2'b00, 4'h3 },

{ 1'b1, 6'h02 },

{ 1'b0, 2'b00, 4'hb },

{ 1'b0, 2'b00, 4'h3 },

{ 1'b1, 6'h02 },

{ 1'b0, 2'b00, 4'ha },

{ 1'b1, 6'h2f },

{ 1'b0, 2'b00, 4'h0f }

};We can then use a simple Verilog function to turn this command address into its associated command.

function [WORDWID-1:0] getcommand;

input [LGNCMDS-1:0] i_addr;

getcommand = COMMANDS[i_addr * WORDWID +: WORDWID];

endfunctionWhile I don’t normally use functions, in this case the function is the key to this whole algorithm. Why? Because I can now reuse the function throughout to map addresses to commands.

// f_active_cmd is the command that's currently *active* and defining

// outputs

always @(*)

f_active_cmd = getcommand(f_active);

// f_cmd_cmd describes the command currently waiting to be interpreted

always @(*)

f_cmd_cmd = getcommand(f_cmd);

// f_prior_cmd is the command I was just executing before the current

// one

always @(*)

f_prior_cmd = getcommand(f_active-1);Because there is a single common mapping from address to command, used by both the logic of the core and the logic of the formal properties, I can assert that these copies of the various commands actually match what each stage of the algorithm should be doing.

Only one other big step is required: we’ll need to constrain all of these

addresses with respect to each other. You know, the f_cmd address must

follow f_addr, and f_active must follow f_cmd.

always @(*)

begin

assert(f_cmd + 1 == f_addr);

assert(f_active + 1 == f_cmd);

endOf course, this is only an overview. The actual logic, below, corrects

several details. For example, assert(f_cmd + 1 == f_addr); will fail if

f_cmd is about to roll over to the next bit. Similarly, my reset sequence

needs to be run only once starting from a reset command, whereas the refresh

sequence needs to repeat over and over forever. The main core of the logic,

however, remains as simple as it always was.

Shall we take a peek at how this is done?

MicroController Logic

Let’s look over the basic logic of this really simple ultra-micro controller.

First, since I only want to build this once, I’ve chosen to make it highly configurable. The core accepts a parameterized number of command bits and again a parameterized number of delay bits.

module genuctrl(i_clk, i_reset, o_cmd);

parameter CMDWIDTH = 4;

parameter LGDELAY = 6;Similarly, the core accepts a power of two length command set.

parameter LGNCMDS = 3;

localparam NCMDS = (1<<LGNCMDS);Every word in our command set needs to have at least CMDWIDTH bits,

LGDELAY bits, plus one more bit for the opcode if LGDELAY indicates

we’ll use a delay counter in our opcode.

localparam WORDWID = ((LGDELAY > 0) ? 1:0)

+ ((CMDWIDTH < LGDELAY) ? LGDELAY : CMDWIDTH);We can now create a fairly arbitrary set of commands using this format.

parameter [NCMDS*WORDWID-1:0] COMMANDS = {

//

// Commands read from the bottom (LSBs are first)

// up to the top (MSBs are last)

//

{ 1'b0, 2'b00, 4'h0 }, // Last command

{ 1'b0, 2'b00, 4'h3 },

{ 1'b1, 6'h02 },

{ 1'b0, 2'b00, 4'hb },

{ 1'b0, 2'b00, 4'h3 },

{ 1'b1, 6'h02 },

{ 1'b0, 2'b00, 4'ha },

{ 1'b1, 6'h2f },

{ 1'b0, 2'b00, 4'h0f } // First command

};We’ll also insist that the first command of this sequence, that’s the one on the bottom, start with an output-register opcode.

Ideally, I’d like to formally verify this core against all possible command sets. However, using a parameter to set these commands really keeps me from doing this. Why then would I use a parameter to define the commands?

For a couple of reasons. First, unlike memories, (and even ROM memories!) parameters cannot change their values during induction. Second, I am depending upon the properties of the resulting sequence to then pass verification once this core is incorporated into another as a submodule. My goal is to avoid turning this module into a black box that spits out unconstrained values. The parent module will depend upon these outputs and their sequencing for a successful formal proof.

We’ll also pick, arbitrarily, that the first command will set an initial state. All values throughout our processing chain will then get reset to this initial state on any reset.

localparam [WORDWID-1:0] INITIAL_COMMAND = COMMANDS[WORDWID-1:0];Finally, we’ll support two options: OPT_REPEAT and OPT_DELAY. OPT_REPEAT,

if set, will indicate that we want to keep repeating these commands over and

over–such as the refresh cycle. If OPT_REPEAT is clear, we’ll go through

this sequence once and stop on the last step–as the reset sequence will want

to do. OPT_DELAY on the other hand is a simple short hand for checking

whether or not we have one or more bits allocated to the delay counter.

If OPT_DELAY is clear, the counter will remain at zero and all commands will

proceed at the same pace.

parameter [0:0] OPT_REPEAT = 1'b1;

localparam [0:0] OPT_DELAY = (LGDELAY > 0);With that aside, we can move into the design itself.

Our first task will be to create a global step signal, w_step. If this

signal is ever true, all of our various states will step forward. Our goal

will be to set w_step so that it is only ever true if the counter is zero,

so that it can replace the r_count == 0 logic check above and simplify

our logic.

So here’s how this works. We’ll start with the wait count kept in r_count.

We’ll set this to zero initially so that the first command can always

contain the bits to output on any reset.

generate if (OPT_DELAY)

begin : DELAY_COUNTER

reg [LGDELAY-1:0] r_count;

initial r_count = 0;

always @(posedge i_clk)While the opcode might start with a delay, this turns out to be rather problematic, since it leaves the output registers undefined both initially and following any reset. Disallowing this simplifies our initial and reset logic therefore.

The reset should always bring this counter back to this same original state.

if (i_reset)

r_count <= 0;Every time the counter reaches (or stays at) zero, we’ll advance to the next

instruction. If that instruction, kept in r_cmd, contains a delay

instruction then we’ll set the counter to that delay, otherwise we’ll keep it

at zero. (Remember, w_step is equivalent to r_count == 0.)

else if (w_step)

begin

if (r_cmd[WORDWID-1])

// This is a delay instruction

r_count <= r_cmd[LGDELAY-1:0];

else

// This is a command instruction

r_count <= 0;Finally, if r_count != 0, we’ll decrement it until it does equal zero.

end else

r_count <= r_count - 1;In order to stay off of the critical timing path, we’ll set w_step so that

it’s equivalent to r_count == 0. That means that it’s logic will (roughly)

mirror that of r_count above, with only a few subtle differences.

initial r_step = 1;

always @(posedge i_clk)

if(i_reset)

r_step <= 1;

else if (r_step)

begin

if (r_cmd[WORDWID-1])

// This is a delay instruction

r_step <= (r_cmd[LGDELAY-1:0] == 0);

else

// This is a command instruction

r_step <= 1;

end else // if (!r_step)

r_step <= (r_count == 1);

assign w_step = r_step;While the logic above is fairly straightforward, getting it right and convincing

yourself that it’s right can be valuable. For this, we’ll set up a single

formal property to make certain that r_step is truly equivalent to

r_count == 0.

`ifdef FORMAL

always @(*)

assert(r_step == (r_count == 0));We can also check the counter against the currently active instruction. If the instruction is not a delay OpCode, then the counter must be zero.

always @(*)

if (!f_active_cmd[WORDWID-1])

assert(r_count == 0);Otherwise it must be less than the delay specified in the current command.

else

assert(r_count <= f_active_cmd[LGDELAY-1:0]);

`endifFinally, if the number of delay bits is zero, LGDELAY==0 and so !OPT_DELAY,

then the r_step signal should always be high.

end else begin

assign w_step = 1'b1;

end endgenerateThat leaves three primary steps left to this algorithm.

The first is to calculate the next command address. Because we have chosen a super simple command set, there are no jumps, no pipeline stalls, nothing but the next address. That means we can keep this logic as simple as initializing the address to zero and then perpetually incrementing it every time we step forward.

That would work if we wanted to repeat this logic forever–such as with the

refresh sequence. If we want to stop, though–such as at the end of the reset

sequence, then we’ll need recognize a last address and stop incrementing

r_addr once we get there. In the logic below, this last address is captured

by (&r_addr)–the address with every bit set. If we are not repeating,

we’ll stop at this address.

initial r_addr = 0;

always @(posedge i_clk)

if (i_reset)

r_addr <= 0;

else if (w_step && (OPT_REPEAT || !(&r_addr)))

r_addr <= r_addr + 1;The second of the three remaining steps is to read the command from our parameter set. Other than the initialization and reset logic, this is very straightforward.

initial r_cmd = INITIAL_COMMAND;

always @(posedge i_clk)

if (i_reset)

r_cmd <= INITIAL_COMMAND;

else if (w_step)

r_cmd <= getcommand(r_addr);Finally, the last step is to act upon the command. For this core, we’ll act

upon it by setting a number of bits in our output, o_cmd, based upon the

values captured by the command.

always @(posedge i_clk)

if (w_step)

o_cmd <= r_cmd[CMDWIDTH-1:0];Or, at least that’s the thought.

The problem is that we only want to set our output bits on a command opcode and not on a wait opcode. So we now need to check if either we aren’t implementing the wait opcode, or if the next command isn’t a wait command, before setting our output bits based upon the instruction.

always @(posedge i_clk)

if (w_step && (!OPT_DELAY || !r_cmd[WORDWID-1]))

o_cmd <= r_cmd[CMDWIDTH-1:0];But what should our initial and reset value be? Ideally, we’d want to set

o_cmd initially to whatever was in the first instruction and then

update it on every step following.

initial o_cmd = INITIAL_COMMAND[CMDWIDTH-1:0];

always @(posedge i_clk)

if (i_reset)

o_cmd <= INITIAL_COMMAND[CMDWIDTH-1:0];

else if (w_step && (!OPT_DELAY || !r_cmd[WORDWID-1]))

o_cmd <= r_cmd[CMDWIDTH-1:0];We’ve already discussed the getcommand function above, so we don’t

really need to discuss it more here.

function [WORDWID-1:0] getcommand;

input [LGNCMDS-1:0] i_addr;

getcommand = COMMANDS[i_addr * WORDWID +: WORDWID];

endfunctionVoila! That’s the logic to our ultra-micro controller: 1) calculate an instruction address, 2) read the instruction, and then 3) do what it tells you to.

Hopefully this logic appears quite straightforward. It’s supposed to be. If not, feel free to review the Verilog design yourself to see how it works. It’s supposed to be a very simple and reusable logic structure.

Formal Verification

That’s the basic logic associated with this simple ultra-micro controller, now let’s take a look at the formal properties necessary to force the induction engine into a consistent state.

There are two things to note before starting: First, this ultra-micro

controller

has only one input, i_reset. That’s it. That means we don’t need to make

any assumptions about how the various inputs might relate to each other.

|

Second, our basic approach will be to shadow the ultra-micro controller’s pipeline with a set of registers used only for formal verification–a packet of information if you will. This is very similar to the way riscv-formal works: you form a packet of information describing each instruction as it goes through the pipeline. In this case, however, we’ll draw our assertions about that packet as it works its way through the pipeline rather than just at the end as riscv-formal does.

I’m also going to use the abbreviations f_addr, f_cmd, and f_active to

describe the three different pipeline stages, while prior will be used to

describe any stage prior to active. Therefore, f_active_cmd will refer to

the command currently being carried out, whereas f_cmd_cmd will refer to the

command we are going to interpret next, and so forth. This will allow us to

assert, in a moment, that the output command bits are equal to either the low

order bits of f_active_cmd, or the low order bits of the prior command,

f_prior_cmd.

`ifdef FORMAL

// reg [LGNCMDS-1:0] f_addr, f_cmd, f_active;

reg [WORDWID-1:0] f_active_cmd, f_cmd_cmd, f_prior_cmd;

reg [LGNCMDS-1:0] fn_cmd, fn_active;

reg f_past_valid;Our first step will be to get a copy of the commands that should be executing in each of the various stages of processing–as outlined in Fig. 4 above.

always @(*)

f_active_cmd = getcommand(f_active);

always @(*)

f_cmd_cmd = getcommand(f_cmd);

always @(*)

f_prior_cmd = getcommand(f_active-1);

always @(*)

assert(r_cmd == getcommand(f_cmd));As discussed above, we’ll shadow the next command address with f_addr.

always @(*)

assert(f_addr == r_addr);Then, on each step, we’ll move push one command in and through the pipeline.

initial { f_active, f_cmd, f_addr } = 0;

always @(posedge i_clk)

if (i_reset)

{ f_active, f_cmd, f_addr } <= 0;

else if (w_step)

begin

if (OPT_REPEAT || !(&f_addr))

f_addr <= f_addr + 1;

{ f_active, f_cmd } <= { f_cmd, f_addr };

endOne of the hassles of dealing with addition wrapping in a finite number

of bits is that value + 1 uses one more bit than value rather than

wrapping. To make certain that adding one to a value causes the sum to

remain within the right number of bits, we need to force it back to the

bit-width of our address.

always @(*)

fn_cmd = f_cmd + 1;

always @(*)

fn_active = f_active + 1;Now that I have that, I can work through how the addresses should exist in relationship to each other. Let’s start with the case where the ultra-micro controller goes through its instructions in a one-shot (non-repeating) manner.

always @(*)

if (!OPT_REPEAT)

beginWe start out with everything at zero, so if f_addr is still at zero then

everything else must still be at zero.

if (f_addr == 0)

begin

assert(f_cmd == 0);

assert(f_active == 0);Otherwise, if the f_cmd stage is still at address zero, then we are one step

further–having incremented f_addr but not yet propagated that incremented

value to f_cmd.

end else if (f_cmd == 0)

begin

assert(f_active == 0);

assert(f_addr == 1);Now let’s turn to look at the other end. If f_active is all ones, then it’s

saturated at the last instruction as has everything before it.

end else if (&f_active)

begin

assert(&f_cmd);

assert(&f_addr);If f_active hasn’t yet saturated, but f_cmd has, then f_active will

saturate on the next clock. Of course, f_cmd can’t get to the last value

unless f_addr has already done so, so f_addr must be on the last address

as well. Finally, notice how we can check f_active+1 against f_cmd without

worrying about overflow, since f_cmd is already the maximum positive number

and f_active one less.

end else if (&f_cmd)

begin

assert(&f_addr);

assert(f_active + 1 == f_cmd);If none of those special cases are true, then we must be somewhere in the

middle: f_addr + 2 == f_cmd + 1 == f_active.

end else begin

assert(f_cmd + 1 == f_addr);

assert(f_active + 1 == f_cmd);

endThe case where we repeat is a touch more interesting. In this case, f_active

will remain equal to f_cmd for the first two initial clock cycles. In the

first cycle, f_addr == f_cmd == f_active == 0. In the second cycle,

f_addr == 1, while f_cmd == f_active == 0.

end else begin

if (f_active == f_cmd)

begin

assert(f_active == 0);

assert(f_addr <= 1);Once we get past those two first cycles, we then know that f_addr == f_cmd+1

and f_cmd == f_active + 1 with the exception that we have to math that will

wrap around after the last instruction. This was why we created fn_cmd and

fn_active, and then limited them to the bare width of any address.

end else begin

assert(fn_cmd == f_addr);

assert(fn_active == f_cmd);

end

endNow, what about our outputs? Realistically, that’s what we want to guarantee,

that we have the right outputs. Those are kept in o_cmd, and consist of the

bottom several bits of any command word.

Here’s where all of our address work pays off. Because we’ve set

f_active_cmd to be equal to the instruction at f_active, we can assert that

o_cmd matches the bits in f_active_cmd.

Well, not quite. What if the command in the last pipeline stage is a delay command? Okay, so as long as it’s not a delay command we can make this assertion.

always @(*)

if (!OPT_DELAY || !f_active_cmd[WORDWID-1])

assert(o_cmd == f_active_cmd[CMDWIDTH-1:0]);But what if it is a delay command? In that case, our outgoing command bits must match those of the prior command. As before, though, that’s only if the prior command isn’t also a delay. Since it’s not likely that we’ll ever want to place two delays in a row, this should be good enough.

else if ((f_active > 0)&&(!f_prior_cmd[WORDWID-1]))

assert(o_cmd == f_prior_cmd[CMDWIDTH-1:0]);There’s one other assertion we should make, and that is that the initial

command word is going to set o_cmd rather than be a delay instruction.

So let’s assert that this initial opcode is a set-bits op-code, that way

we’ll know that we always have a good set of output bits from the initial

state or the reset state forward.

always @(*)

assert(!INITIAL_COMMAND[WORDWID-1]);

`endif

endmoduleThe Reset Sequence

Before leaving this topic, let’s take a quick peek at how this ultra-micro controller gets used in an SDRAM controller. In particular, within this controller for the iCE CORE board, you have this piece of logic.

localparam [11:0] MODE_COMMAND =

{ 5'b00000, // Burst reads and writes

CAS_LATENCY[2:0],// CAS latency (3 clocks)

1'b0, // Sequential (not interleaved)

3'b001 // 32-bit burst length

};

genuctrl #(

.CMDWIDTH(1+4+1),

.LGDELAY(4),

.LGNCMDS(4),

.OPT_REPEAT(0),

.COMMANDS({

// Read these commands from the bottom up

{ 1'b0, 1'b0, CMD_NOOP, MODE_COMMAND[10] },

{ 1'b0, 1'b0, CMD_NOOP, MODE_COMMAND[10] },

{ 1'b0, 1'b0, CMD_NOOP, MODE_COMMAND[10] },

{ 1'b0, 1'b0, CMD_NOOP, MODE_COMMAND[10] },

//

// Need two cycles following SET_MODE before the first

// command

{ 1'b0, 1'b1, CMD_NOOP, MODE_COMMAND[10] },

{ 1'b0, 1'b1, CMD_SET_MODE, MODE_COMMAND[10] },

{ 1'b1, 2'b00, 4'h6 },

{ 1'b0, 1'b1, CMD_NOOP, MODE_COMMAND[10] },

{ 1'b0, 1'b1, CMD_REFRESH, MODE_COMMAND[10] },

{ 1'b1, 2'b00, 4'h6 },

{ 1'b0, 1'b1, CMD_NOOP, MODE_COMMAND[10] },

{ 1'b0, 1'b1, CMD_REFRESH, MODE_COMMAND[10] },

{ 1'b1, 2'b00, 4'h0 },

{ 1'b0, 1'b1, CMD_NOOP, MODE_COMMAND[10] },

{ 1'b0, 1'b1, CMD_PRECHARGE, 1'b1 },

// The command list starts here, with a no-op then precharge

{ 1'b0, 1'b1, CMD_NOOP, MODE_COMMAND[10] }

})

) reset_controller(i_clk, startup_hold,

{ reset_active, reset_command, reset_addr10 });The most difficult thing about reading this is that the commands start from low addresses and work their way up to higher addresses. The second challenging part is to realize that a command followed by a delay of zero issues the same command twice, and that a command followed by a delay of one issues it three times–so the actual number is the delay value plus two. Now, reading from the bottom up, we have:

- The first command is a NOOP,

CMD_NOOP. It last’s for one cycle. - The second command is a PRECHARGE command,

CMD_PRECHARGE. The final bit,1'b1, controls the eleventh address bit indicating that this command precharges all of the banks of the SDRAM. - We then return to a NOOP command.

- We maintain that NOOP command for a one cycle delay.

- Then we issue a REFRESH command,

CMD_REFRESH. - Set the outputs to a NOOP, and

- Wait

6+1cycles. (Total 8-cycles of NOOP) - Issue a second REFRESH command,

CMD_REFRESH. - Another NOOP

- Wait for

6+1cycles again - Issue a SETMODE command,

CMD_SET_MODE - Another NOOP

- Finally, that bit just more significant than the 4-bit SDRAM command gets released This is the flag indicating to the rest of the logic that the reset sequence is complete.

For more information on the meaning of these various commands, you should check out the data sheet for the ISSI SDRAM. In general, this sequence just follows directly from the one listed in the data sheet.

Notice also that all I’ve done is to script the startup script described in the SDRAM Spec directly into the design. Framing the problem in terms of this ultra-micro controller made it fairly easy to do.

That said, perhaps you noticed two confusing things.

-

The instructions read backwards. I may wish to change this in the future, but for now that’s how the controller works.

-

If you want to issue 7-cycles of NOOPs, you have to issue two commands. The first command switches the outgoing

reset_commandto aCMD_NOOP, and the second command waits. As built, this is not one command Worse, a wait cycle of ‘0’ will cause the controller to issue two NOOP’s, not zero and not one: one for the command, and another for the delay instruction.

The Refresh Sequence

The other sequence I used this ultra-micro controller for is the refresh sequence. In many ways, the refresh sequence is very similar to the reset sequence. The biggest difference is that the refresh sequence repeats.

Before diving into this sequence, let me just say that SDRAM refresh control can be much more complicated than what I’m presenting here. A “smart” SDRAM controller might issue more refreshes any time the chip is idle. That way the SDRAM doesn’t necessarily need to be interrupted when busy–or at least not nearly as often.

I’m not going to over think this today. In this case, we’ll use our ultra-micro controller to drive the refresh logic.

genuctrl #(

.CMDWIDTH(1+4+1),

.LGDELAY(LGREF),

.LGNCMDS(4),

.OPT_REPEAT(1),

.COMMANDS({

// Read these commands from the bottom up

{ 1'b0, REF_ZEROS, 2'b00, CMD_NOOP },

{ 1'b0, REF_ZEROS, 2'b00, CMD_NOOP },

{ 1'b0, REF_ZEROS, 2'b00, CMD_NOOP },

{ 1'b0, REF_ZEROS, 2'b00, CMD_NOOP },

{ 1'b0, REF_ZEROS, 2'b00, CMD_NOOP },

{ 1'b0, REF_ZEROS, 2'b00, CMD_NOOP },

//

{ 1'b1, CK_REFRESH_NOOP_TO_ACTIVATE },

{ 1'b0, REF_ZEROS, 2'b11, CMD_NOOP },

{ 1'b0, REF_ZEROS, 2'b11, CMD_REFRESH },

{ 1'b1, CK_PRECHARGE_TO_REFRESH },

{ 1'b0, REF_ZEROS, 2'b11, CMD_NOOP },

{ 1'b0, REF_ZEROS, 2'b11, CMD_PRECHARGE },

{ 1'b1, CK_WAIT_FOR_IDLE },

{ 1'b0, REF_ZEROS, 2'b01, CMD_NOOP },

{ 1'b1, CK_REMAINING },

{ 1'b0, REF_ZEROS, 2'b00, CMD_NOOP }

//

// ...

//

})

) refresh_controller(i_clk, reset_active,

{ refresh_active, refresh_stall, refresh_cmd });This implementation

is a little different: the refresh command is placed at the low order bits.

The next two most significant bits are a refresh stall, and a refresh active

bit. In this case, refresh_stall is an instruction to the bus controller

to stall any bus requests that might be coming into the controller. That allows

us to flush any active requests through the processing pipeline until the

refresh commands become active and start to override the memory control.

Let’s just scan through this command set briefly, skipping commands in our broad-brush through it:

- We start in reset. As long as the reset sequence is active, we hold the controller in reset.

- Once we come out of reset, we’ll issue a NOOP. Remember, this is also our

initial values that we set our results to during the reset cycle as well.

More importantly, we let the design run normally following the reset

without overriding any normal operation.

We’ll do this for

CK_REMAINING +2clock cycles. (The formula for this duration is beyond the scope of this article, but easily verifiable from within the core.) - When it’s finally time to start our refresh cycle, we’ll force the Wishbone

interface to stall. We then wait

CK_WAIT_FOR_IDLE+2cycles for any commands in process to flush through our interface and the bus to come to a halt. - Then we issue a PRECHARGE (all) command to close any active memory banks.

This takes

CK_PRECHARGE_TO_REFRESH+2clock cycles to complete, so we issue aCMD_NOOPduring these cycles. - We can finally issue the REFRESH command. However, we can’t immediately

go back to using the interface. Instead, we need to wait

CK_REFRESH_NOOP_TO_ACTIVATE+2cycles before the memory is (almost) ready to accept a command. - The next command clears any maintenance-induced bus stalls, and it also clears our refresh cycle allowing the rest of the memory control to return to bus control.

- Sadly, though, we need to go through all of the listed commands–even though we stopped early. Therefore, we issue idle NOOP’s until we reach the end of our list before starting over.

As with the reset sequence, most of this follows from the

ISSI SDRAM specification.

It’s timing driven, and specifically driven to issue one reset at a time

every N cycles as determined from the

specification.

Conclusion

While I’m not going to argue that this “ultra-micro controller” forms a general purpose computer, it does have many of the attributes of a more general purpose computer: It follows a pre-defined “program” or script of instructions. Each instruction has an “OpCode”. In this case, the “OpCode” was either to output a series of bits or to wait for a given count. Unlike more general purpose computers, this “ultra-micro controller” has no ability to add, subtract, test values, or branch. Still, it was good enough for today’s purposes to be reused in two different DRAM controllers in two different ways.

Not only that, this simple example makes a great illustration of how you can create a packet of information as instructions work their way through a CPU’s pipeline, and assert consistency with that pipeline along the way. This is a very useful pattern that you will likely see time and again when formally verifying pipelines.

And she said to the king, It was a true report that I heard in mine own land of thy acts and of thy wisdom. Howbeit I believed not the words, until I came, and mine eyes had seen it: and, behold, the half was not told me: thy wisdom and prosperity exceedeth the fame which I heard. (1King 10:6-7)