AXI Stream is broken

There, I said it. One of the simplest and most useful AXI protocols, AXI Stream, is fundamentally flawed.

Let’s quickly review AXI Stream, and then I’ll tell you what I mean by saying the protocol is broken. Then I’ll propose a method of fixing at least part of the problem.

What is AXI Stream?

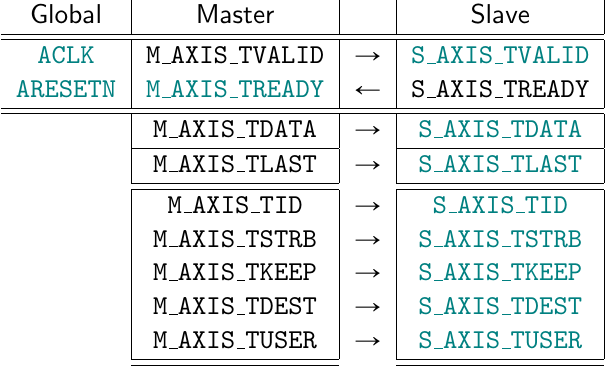

We’ve discussed AXI Streams a couple of times on this blog already, most recently when discussing the basic AXI handshaking rules. As a quick background, AXI Stream is a protocol that can be useful for transferring stream data around within a chip. Unlike memory data, there’s no address associated with stream data–it’s just a stream of information.


But how fast shall the stream run? As fast as it can be produced, or as fast as it can be consumed? This is determined by a pair of signals: TVALID, indicating that the source (master) has produced valid data on the current clock cycle, and TREADY, indicating that the consumer (slave) is prepared to accept data. Whenever TVALID and TREADY are both true, TDATA moves through the stream.

A third signal is also very useful, and that is TLAST. The TLAST signal can be used to create packets of data, each with a beginning and an end. A packet ends with the beat on which TLAST is true, and the next packet begins with the first beat transferred after that TLAST beat.
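To make the handshake and TLAST rules concrete, here is a minimal cycle-by-cycle sketch in Python (a behavioral model, not RTL). The `collect_packets` name and the `(TVALID, TREADY, TDATA, TLAST)` tuple layout are illustrative assumptions, not part of any AXI library.

```python
from typing import Iterable, List, Tuple

# One clock cycle of a simplified AXI Stream link: (TVALID, TREADY, TDATA, TLAST)
Cycle = Tuple[bool, bool, int, bool]


def collect_packets(cycles: Iterable[Cycle]) -> List[List[int]]:
    """Group transferred beats into packets using TLAST.

    A beat moves only on cycles where TVALID and TREADY are both true;
    idle (!TVALID) and backpressured (TVALID && !TREADY) cycles move nothing.
    """
    packets: List[List[int]] = []
    current: List[int] = []
    for tvalid, tready, tdata, tlast in cycles:
        if not (tvalid and tready):
            continue  # no handshake, so no transfer on this cycle
        current.append(tdata)
        if tlast:
            packets.append(current)  # TLAST closes the packet
            current = []             # the next accepted beat starts a new one
    return packets


trace = [
    (True, True, 10, False),
    (True, False, 11, False),  # backpressure: TDATA is held, not transferred
    (True, True, 11, True),    # last beat of the first packet
    (False, True, 0, False),   # idle cycle
    (True, True, 20, True),    # a one-beat packet
]
print(collect_packets(trace))  # [[10, 11], [20]]
```

Note that the backpressured cycle contributes nothing: the same TDATA value is simply presented again until TREADY rises.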

The stream standard also defines such signals as TID (an ID for a packet), TDEST (the packet’s destination), TSTRB (which bytes have valid information), and TKEEP (which bytes in a stream cannot be removed), but I have personally only rarely seen uses for these. The one exception is the TUSER field, which comes nicely into play in video streams, but I digress.

There’s one other important term you need to know: backpressure.

Backpressure is what’s created when the source is ready to send data, that is when TVALID is true, but the consumer isn’t ready to receive it and so TREADY is false. Under the AXI Stream protocol, there’s no limit to how much backpressure a slave may impose on any stream.

And herein lies my complaint.

What’s wrong with AXI Stream?

There are three basic types of stream components, and realistically AXI Stream only works for one of these three.


- Sources. Most of the physical data sources I’ve come across produce data at a fixed and known rate. Examples of these sources include:

- Analog to Digital converters

- Network PHYs

- Digital cameras

In all three of these examples, data comes whether you are ready for it or not. What then happens if TVALID is true, from the source, but the processing stream isn’t ready to handle it, i.e. TREADY is low? Where does the data go?

As an example, some of the filters we’ve created on this blog can only accept one data sample every N clock cycles (for some arbitrary N). What happens if you choose to feed such a filter with data arriving faster than one sample every N clock cycles?

Let’s call this the over-energetic source problem for lack of a better term, and it is a problem. In general, there is no way to detect an over-energetic source from within the protocol. As a result, there is no protocol compliant way to handle this situation. Given a sufficient amount of backpressure, data will simply be dropped in a non-protocol compliant fashion. There’s really no other choice.


- Processing components. These are neither sources nor sinks, and in today’s taxonomy, I shall arbitrarily declare that they have no real time requirement. In these cases, the AXI Stream protocol can be a really good fit.

Good examples of such processing components include data processing engines. Perhaps some seismic data was recorded a couple of years ago and you wish to process it in an FPGA today. In this case, there may be neither explicit latency nor throughput requirements. The data stream can move through the system as fast as the hardware algorithm will allow it.

- Sinks. Here you have the second problem: most sinks need to interact with physical hardware at a known rate. Corollaries to the examples above form good examples here as well:

- Digital to analog converters

- Network PHYs

- Video monitors

What happens in these cases if it’s time to produce a sample, and TREADY is true to accept that sample, but TVALID is not? That is, data is required but there’s no data to be had? We might call this the sluggish source problem for the sake of discussion, although the condition itself is often called a buffer underrun. As with the first situation, there’s really no protocol compliant way of handling this situation.

Unlike the first situation, however, a sink can often detect this situation (!TVALID && TREADY) and handle it in an application specific manner. For example, a DAC might replicate the last output value and raise an error flag. A network PHY might simply terminate the packet early, without any CRC, and so cause the receiver to drop the packet. A video stream might blank to black, and then wait for the next frame to resynchronize.
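The DAC case above can be sketched in a few lines of Python. This is a hypothetical behavioral model, assuming the DAC consumes one sample per sample clock (so its TREADY is effectively always high there) and resets its output to zero; `dac_sink` is an illustrative name, not an existing core.

```python
from typing import List, Optional, Tuple


def dac_sink(offered: List[Optional[int]]) -> Tuple[List[int], bool]:
    """Model a DAC that must produce one output on every sample clock.

    offered[i] is the TDATA presented on sample clock i, or None if TVALID
    was low then. Since the DAC is always ready (TREADY high), a None entry
    is exactly the !TVALID && TREADY underrun condition.
    """
    outputs: List[int] = []
    last_value = 0     # assumed reset value of the DAC output
    underrun = False   # sticky error flag, set on the first underrun
    for sample in offered:
        if sample is None:
            underrun = True
            outputs.append(last_value)  # replicate the previous output
        else:
            last_value = sample
            outputs.append(sample)
    return outputs, underrun


print(dac_sink([5, 6, None, 7]))  # ([5, 6, 6, 7], True)
```

The flag is sticky by choice here, so that software or a status register can notice an underrun after the fact; a real design might instead count underruns.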

From this list, you can see that two of the three AXI Stream component types can’t handle the raw AXI Stream protocol very well. Of these two, the over-energetic source problem is the more problematic.
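The over-energetic source problem is easy to reproduce in a toy model. The Python sketch below is hypothetical: it assumes a source that presents a new sample every `source_period` clocks regardless of TREADY, a slave (like the filters mentioned above) that raises TREADY once every `sink_period` clocks, and a single holding register in between. Whenever a new sample arrives while the previous one is still waiting, the old one is overwritten, which is the non-compliant drop described above.

```python
def count_dropped(num_cycles: int, source_period: int, sink_period: int) -> int:
    """Count samples lost between a fixed-rate source and a slower slave."""
    pending = False  # a sample is waiting in the holding register (TVALID)
    dropped = 0
    for cycle in range(num_cycles):
        # Source side: new data arrives on schedule, ready or not.
        if cycle % source_period == 0:
            if pending:
                dropped += 1  # previous sample was never accepted: lost
            pending = True
        # Slave side: TREADY is high once every sink_period clocks.
        if pending and cycle % sink_period == 0:
            pending = False  # TVALID && TREADY: the sample is accepted
    return dropped


# A new sample on every clock into a filter that accepts one every 4 clocks
print(count_dropped(8, source_period=1, sink_period=4))  # 5
# Matched rates never drop anything
print(count_dropped(100, source_period=4, sink_period=4))  # 0
```

Adding buffering between the two only delays the first drop; a sustained rate mismatch eventually overflows any finite buffer.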

There’s another, more minor, problem with AXI streams, and that is the problem of recording any metadata associated with the stream. As an example, let’s just consider the TLAST signal. What should an AXI streams processing system do with the TLAST signal when the data needs to be recorded to memory? In general, it’s just dropped. In many cases, that “just works”. For example, if you are recording video, where every video frame has the same length, then you should be able to tell what packet you are on by where the write pointer is in memory. On the other hand, if every packet has a different size, then a different format is really needed.
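For the fixed-length case, recovering the packet boundary is just arithmetic on the write address. A minimal Python sketch, assuming a hypothetical buffer base address and frame size (the 640x480, 2-byte-per-pixel frame is only an example):

```python
from typing import Tuple


def locate(write_addr: int, base_addr: int, frame_bytes: int) -> Tuple[int, int]:
    """Return (frame number, byte offset within that frame) for an address.

    Only meaningful when every packet has the same length, so TLAST can be
    dropped on the way to memory and reconstructed from the address alone.
    """
    if frame_bytes <= 0:
        raise ValueError("frame_bytes must be positive")
    return divmod(write_addr - base_addr, frame_bytes)


FRAME_BYTES = 640 * 480 * 2   # example: 640x480 pixels, 2 bytes per pixel
BASE = 0x1000_0000            # example buffer base address
print(locate(BASE + 2 * FRAME_BYTES + 100, BASE, FRAME_BYTES))  # (2, 100)
```

Once packets vary in length, no such formula exists, and the boundary has to be stored somewhere.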

These are the two problems I’ve come across when working with AXI streams which I would like to address today.

Motivating a Solution

Just to motivate a solution a little bit, I’m currently working on building a SONAR data capture design for a customer. This particular design will be for an underwater SONAR device, so physical access to the actual device will be limited. We’ve chosen instead to run all access over an Ethernet connection. On top of that, prior experience building designs like this has generated some reluctance in the team I’m working with to have a design depend upon a CPU. They would rather have a design that “just works” as soon as power is applied.

Fig. 4 shows a basic outline of this application, where data is collected from a number of sensors, serialized, compressed, organized into packets, converted to UDP, and then forwarded across a network data link.


My previous approach to network handling didn’t “just work” at the speeds I wanted to work at. In that case, the ARM CPU on the DE10-Nano couldn’t keep up with my data rate requirements. (Okay, part of the fault was the method I was using to transfer data to the CPU …) Lesson learned? Automated data processing, and in particular network packet handling that approaches the capacity of the network link, should be handled in the fabric, not in the CPU.

This meant that I needed to rearrange my approach to handling networks. I needed something automatic, something that didn’t require a CPU, and something that “just worked”.

I first looked at AXI stream, and then came face to face with the problem above: what happens if the stream is going to someplace where the buffer is full? What happens if there’s too much backpressure in the system to handle a new packet? What happens when an output packet is blocked by a collision with another outgoing packet, or when such a collision takes so long to resolve that there’s no longer any buffer space to hold the outgoing packet?

In network protocols, the typical answer to this problem is to drop packets. Unfortunately, the AXI stream protocol offers no native support for dropping packets.

Let’s fix that.

Proposing a solution: Packet aborts

At this point, I’m now well into the design of this system, and the solution I chose was to add an ABORT signal to the AXI stream protocol. The basic idea behind this signal is that an AXI master should be able to cancel any ongoing packet if the slave generates too much backpressure (holds TREADY low too long).

Here are the basic rules to this new ABORT signal:

- ABORT may be raised at any time, whether or not TVALID or TREADY are true. ABORT may also be raised for any cause; backpressure is only one potential cause. Why? Think about it. An ABORT signal caused by backpressure at one point in a processing chain may need to propagate forward to other portions of the chain that weren’t suffering backpressure. In other words, the protocol needs to allow ABORTs whether or not backpressure is currently present on a given link.

- Following an abort, the next sample from the AXI stream will always be the first sample of the next packet.

- If ABORT && !TVALID, then the ABORT may be dropped immediately.

- If ABORT && TVALID, then the ABORT signal may not be dropped until after ABORT && TVALID && TREADY.

- Once TVALID && TREADY && TLAST have been received, the source can no longer abort a packet.

The ABORT signal is very similar to a TUSER signal, but with a couple of subtle differences. First, TUSER is only valid if TVALID is also true, whereas ABORT may be raised at any time. Second, an ABORT signal may be raised while the channel is stalled with TVALID && !TREADY. This would be a protocol violation if a TUSER signal were used to carry an ABORT signal.

Still, that’s the basic protocol. For lack of a better name, I’ve chosen to call this the “AXI network” protocol or AXIN for short.
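The rules above lend themselves to formal properties. As a much lighter illustration, here is a hypothetical Python replay of an AXIN trace. It checks only the ABORT hold rule (once ABORT && TVALID is raised, ABORT stays high until TREADY) and models a slave that discards any partially received packet on ABORT, so that the next accepted beat starts a new packet. The `Beat` and `run_axin` names are invented for this sketch, and treating a beat presented alongside ABORT as discarded is an assumption.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Beat:
    """One clock cycle of a hypothetical AXIN interface."""
    valid: bool
    ready: bool
    data: int = 0
    last: bool = False
    abort: bool = False


def run_axin(trace: List[Beat]) -> Tuple[List[List[int]], List[str]]:
    """Replay a trace, returning (completed packets, rule violations)."""
    packets: List[List[int]] = []
    violations: List[str] = []
    partial: List[int] = []
    prev: Optional[Beat] = None

    for cycle, b in enumerate(trace):
        # ABORT && TVALID may not drop until ABORT && TVALID && TREADY.
        if (prev is not None and prev.abort and prev.valid
                and not prev.ready and not b.abort):
            violations.append(f"cycle {cycle}: ABORT dropped before TREADY")

        if b.abort:
            # Discard the packet in progress; any beat shown alongside ABORT
            # is treated as part of the aborted packet (an assumption).
            partial = []
        elif b.valid and b.ready:
            partial.append(b.data)
            if b.last:
                # After TLAST is accepted the packet can no longer be aborted.
                packets.append(partial)
                partial = []
        prev = b
    return packets, violations


trace = [
    Beat(True, True, 1), Beat(True, True, 2, last=True),  # packet [1, 2]
    Beat(True, True, 3), Beat(True, True, 4),             # start of a packet...
    Beat(True, False, 0, abort=True),                     # ...aborted while stalled
    Beat(True, True, 0, abort=True),                      # ABORT held until TREADY
    Beat(True, True, 5), Beat(True, True, 6, last=True),  # next packet [5, 6]
]
print(run_axin(trace))  # ([[1, 2], [5, 6]], [])
```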

The DROP signal

I would be remiss if I didn’t mention @JoshTyler’s prior work before going any further. @JoshTyler chose to use a DROP signal, similar to my ABORT signal proposed above. Whenever his Packet FIFO encounters a DROP signal, it simply drops the entire packet, much like the ABORT signal I’ve proposed above would do.

There are a couple of subtle differences, however, between his DROP signal and my proposed ABORT signal. The biggest difference between the two is that, in the AXIN protocol, the ABORT signal may arrive without an accompanying TVALID. ABORT may also be raised even if the stream is stalled with TVALID && !TREADY. From this standpoint, it’s more of an out-of-band signal, whereas @JoshTyler’s DROP signal is in-band.

Both approaches are designed to solve this problem, and both could work nicely for this purpose. In general, @JoshTyler’s approach looks like it was easier to implement. However, it also looks like his implementation might hang if ever given a packet larger than the size of his FIFO.

Measuring success: does it work?

Of course, the first question should always be, does my proposed AXIN protocol work? At this point in the development of my own design, I can answer that with a resounding yes! Not only does this technique work, it works quite well.

Allow me to share some of my experiences working with this protocol, starting from the beginning.


- As with most of the protocols I’ve come to work with, my first step was to build a set of formal properties to define the AXIN protocol. These matched the modified AXI stream properties outlined above.

That was the easy part.


- The next piece I built was a basic ABORT-enabled FIFO, shown in Fig. 6 on the left. This FIFO had two AXIN ports: an AXIN slave port and an AXIN master port. The simple goal of the FIFO was to provide some buffer room between a packet source and a destination.

Here I came across the first problem with the protocol: the FIFO turned out to be a real challenge to build. (Josh’s implementation looks easy in comparison.) What happens, for example, if two packets are in a FIFO and the third packet aborts? The first two packets, which now have allocated memory within the FIFO, must go through. What happens if the FIFO is completely full with less than a packet and the source aborts? The FIFO output must abort as well, and the FIFO should then flush the incoming packet until its end, even though the source will know nothing of the packet getting dropped. All of these new conditions made the AXIN protocol a challenge to design for. Today, however, the task is done and the FIFO works.
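To illustrate the rollback idea (and only that), here is a deliberately simplified Python model. It is not the FIFO described above: it is a hypothetical store-and-forward FIFO that only releases complete packets, so it cannot stream a packet larger than its capacity. On ABORT it rolls back to the last committed packet boundary, leaving earlier packets intact, and if a packet overflows the buffer it discards the rest of that packet through its TLAST while the source keeps seeing its beats accepted. `AbortFifo` and its methods are invented names.

```python
from collections import deque
from typing import Deque, List


class AbortFifo:
    """Hypothetical store-and-forward packet FIFO with ABORT support."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity                    # total beats of storage
        self.committed: Deque[List[int]] = deque()  # complete packets only
        self.committed_beats = 0
        self.partial: List[int] = []                # packet being written
        self.flushing = False                       # discarding until TLAST

    def write(self, data: int, last: bool) -> None:
        """Accept one beat (TVALID && TREADY && !ABORT on the slave port)."""
        if self.flushing:
            self.flushing = not last  # stop flushing after the packet's TLAST
            return
        if self.committed_beats + len(self.partial) >= self.capacity:
            self.partial = []         # overflow: drop this packet only
            self.flushing = not last
            return
        self.partial.append(data)
        if last:
            # Commit: from now on this packet can no longer be aborted.
            self.committed.append(self.partial)
            self.committed_beats += len(self.partial)
            self.partial = []

    def abort(self) -> None:
        """ABORT from the source: roll back to the last packet boundary."""
        self.partial = []
        self.flushing = False  # the next beat starts a new packet

    def read_packet(self) -> List[int]:
        """Pop one complete packet (IndexError if none is available)."""
        pkt = self.committed.popleft()
        self.committed_beats -= len(pkt)
        return pkt


fifo = AbortFifo(capacity=4)
fifo.write(1, False)
fifo.write(2, True)          # packet [1, 2] committed
fifo.write(3, False)
fifo.abort()                 # aborted; [1, 2] is untouched
for beat in (4, 5, 6):
    fifo.write(beat, False)  # overflows on 6: this packet is dropped
fifo.write(7, True)          # rest of it flushed through TLAST
fifo.write(8, True)          # packet [8] committed
print(list(fifo.committed))  # [[1, 2], [8]]
```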

Once I had a FIFO in place, things started getting easier. I then built an AXIN payload to UDP converter, an AXIN multiplexer to select from multiple packet sources, an AXIN broadcast element to broadcast packets to multiple separate packet handlers, and more. With each new component, the protocol became easier and easier to use.

Two recent examples might help illustrate the utility of this approach.

- First, on startup, the network PHY is given a long reset. (It might be too long …) During this time, however, packets are still generated; they just need to be dropped internally because the network controller is holding the PHY in reset. Sure enough, the FIFO that I had put so much time and energy into worked like a champ! It dropped these packets, and recovered immediately once the controller brought the PHY out of reset and started accepting packets. Even better, I haven’t found any bugs in it since the formal verification work I went through.

- The second example I have is one of data packet generation. As you may recall, one of the primary design goals was to broadcast data packets, each containing an internal time stamp. The problem is that this data packet includes in its header a time stamp associated with the data, something not known until the data is available. In other words, the header cannot be formed until both the data and its associated time stamp are available. Once that first data sample is available, though, the data must now wait for the header to be formed and forwarded downstream. The problem then replicates itself again in the next processing stage: the payload to UDP packet converter.

My first approach to data packet generation was to try to schedule the packet header generation between the time when the data entered the data compression pipeline stage and when it came out to go into the payload generator (see Fig. 4 above), but then I came across a simulation trace in which the last data sample of one packet was immediately followed by the first data sample of the next packet. That left no clock cycles for header generation between the last data sample of one packet and the first sample of the next. When I went back to the drawing board to look for another solution, I found my AXIN FIFO. Placing this FIFO between the data source and the packet generator solved this problem nicely, and I haven’t had a reason to look back since.

Indeed, the ease of building these subsequent data handling components has easily repaid the time I put into building the original FIFO a couple of times over.

No, this new protocol isn’t perfect. I still needed a way to transition from a packet that can be dropped to one that has been fully accepted. In particular, I wanted to build a DMA engine that could store a packet into memory. Indeed, this brings up the next problem: how shall packet boundaries be preserved once the packet is stored in memory?

I’ve seen two solutions to this problem. One solution is to record TLAST along with the packet data, so the boundary can be rediscovered at a later time. This works, as long as you have room for the TLAST bit. A second solution I’ve found in a vendor design is to capture TLAST data in a separate memory. This works until you discover one memory becoming full before the other one. Indeed, I seem to recall a bug report having to do with the TLAST buffer suffering from an overrun while the TDATA buffer still had plenty of room in it …

But I digress.

Just as a quick reminder, most data buses have a power of two width. That means they are 8b, 16b, 32b, 64b, 128b, etc. wide. At this width, there’s no room for an extra bit of information to hold the TLAST bit indicating the end of a packet once it has been placed into memory. I therefore wanted another solution to this problem.


- This leads to the third main component I built: a bridge from AXIN to a proper AXI stream with no TLAST. I then embed into this stream, in the first 32-bit word of packet data, the length of the packet in bytes. Once converted to this format, basic AXI stream tasks become easy again, since I can now use regular AXI stream components. Similarly, I can now write packets to memory and maintain the packet boundaries at the same time.

As with the FIFO, however, this component was a real challenge to build. Just so we are clear, the challenge wasn’t writing Verilog to handle the task. That part was easy. The real challenge was writing Verilog that would work under all conditions. Sure enough, the formal solver kept finding condition after condition that my AXIN to stream bridge wasn’t handling properly. For example, what should happen if the incoming packet is longer than the slave’s packet buffer? The slave should drop the packet, not the master. All of these conditions and more had to go into this protocol converter.
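The length-prefixed format is what lets packet boundaries survive a trip through memory. The Python sketch below is illustrative only: the byte order, whether the length word counts itself, and the padding rule are not specified above, so it assumes a little-endian length that counts payload bytes only, with each payload zero-padded to the next 32-bit boundary. `frame_packets` and `split_packets` are hypothetical names.

```python
import struct
from typing import List


def frame_packets(packets: List[bytes]) -> bytes:
    """Store packets back to back, each preceded by a 32-bit length word."""
    out = bytearray()
    for pkt in packets:
        out += struct.pack("<I", len(pkt))  # assumed little-endian byte count
        out += pkt
        out += bytes(-len(pkt) % 4)         # pad payload to a 32-bit boundary
    return bytes(out)


def split_packets(buf: bytes) -> List[bytes]:
    """Recover the original packet boundaries from a framed buffer."""
    packets: List[bytes] = []
    pos = 0
    while pos + 4 <= len(buf):
        (length,) = struct.unpack_from("<I", buf, pos)
        pos += 4
        if pos + length > len(buf):
            raise ValueError("truncated packet")
        packets.append(buf[pos:pos + length])
        pos += length + (-length % 4)       # skip payload and its padding
    return packets


pkts = [b"abc", b"12345678", b"x"]
buf = frame_packets(pkts)
print(len(buf))                    # 28
print(split_packets(buf) == pkts)  # True
```

Because the boundary lives inside the data word stream itself, there is no second memory that can overflow independently of the data.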

One reality of my current version of the bridge, however, is that it limits the size of a packet to what can be stored within the block RAM found within the bridge. Given time, I’ll probably remove this limitation by adding an external memory interface–but that’s a project still to come.

However, being able to capture a full packet in block RAM has the advantage of guaranteeing that any locally generated packet is complete before it is sent to the PHY.

Once the whole packet processing chain was put together, it looked roughly like Fig. 8 below.


First, the packet would be formed by grouping raw data samples together with a time stamp and a frame number. In general, this involved a series of stages, almost all with small FIFOs, as various headers were applied. The result was typically an Ethernet packet encapsulating an IPv4 packet encapsulating a UDP packet with an application specific payload. This generated packet had the basic structure shown in Fig. 9.


Going back to Fig. 8, this packet was then buffered, converted to AXI stream proper (without TLAST), and then sent through an asynchronous FIFO. On the other side of the FIFO, the packet was converted back to the AXIN protocol, albeit with the ABORT signal now held low, and a single packet source was selected to feed the network controller. Because every packet source had its own buffer, and because the asynchronous FIFO operated on 32-bit data using a clock speed that was faster than one quarter the network speed, there was never a chance that the network controller would run dry between starting and completing a packet.
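The rate half of that argument is simple arithmetic: each FIFO clock moves 32 bits, so the FIFO keeps pace with the link as long as f_clk * 32 exceeds the link's bit rate. The link rate isn't stated above, so this sketch assumes, purely as an example, a 1 Gb/s link with a byte-wide interface clocked at 125 MHz; the minimum FIFO clock then comes out to one quarter of that byte clock.

```python
def min_fifo_clock_hz(link_bps: float, fifo_width_bits: int = 32) -> float:
    """Lowest FIFO clock that can keep up with a link of link_bps bits/s.

    Each FIFO clock delivers fifo_width_bits bits, so provided the whole
    packet is already buffered upstream, the FIFO side never falls behind
    the link while f_clk * width > link_bps.
    """
    return link_bps / fifo_width_bits


link_bps = 1e9                 # example only: a 1 Gb/s link
byte_clock_hz = link_bps / 8   # 125 MHz for a byte-wide interface
print(min_fifo_clock_hz(link_bps) / 1e6)                 # 31.25 (MHz)
print(min_fifo_clock_hz(link_bps) == byte_clock_hz / 4)  # True
```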

Further, in spite of all the resources used by this approach, the Artix A7-200T I am using still has plenty of LUTs and LUT RAMs (over 80%) remaining for a lot more design work.

Perhaps the proverb that describes this work the best is, “In all labour there is profit,” since my original work to generate the formal AXIN properties, the following network FIFO, and then AXIN to stream converter, has now all been repaid in spades via reuse. (Prov 14:23)

Conclusions

AXI stream remains a simple and easy to use protocol. Indeed, it is ideal for any application where you can guarantee your design will match any needed rates before using it. Yes, backpressure is a great feature, but it is also important to recognize that all systems have a limit to how much backpressure they can handle before breaking any real time requirements. Once the backpressure limit of any design has been reached, some AXI stream sources may need to be able to control what happens next. Others simply need to be able to flag the existence of the data loss. Either way, apart from careful extra-protocol engineering and rate management, data loss becomes inevitable.

So, just how should dropped data be handled in your application?

In the case of network packet data that I’ve been working with, the easy answer is to drop a whole packet whenever on-chip packet congestion becomes too much to deal with. The ABORT signal has worked nicely for this purpose, in spite of the initial challenges I had in working with it.

In many ways, this only pushes the problem up a level in the stack. In my case, that’s okay: Most network protocols are robust enough to be able to handle some amount of packet loss. Packet loss can be detected via packet frame counters, time stamps or both. Packet loss can also be detected in client-server relationships whenever a reply to a given request is not received in a timely manner. In those cases, requests can be retried or eventually abandoned. This is all quite doable, and doing it in a controlled manner is key to success.
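Detecting loss with a frame counter takes only modular arithmetic. A minimal Python sketch, assuming a hypothetical 16-bit counter that increments once per transmitted packet, wraps around, and arrives in order without duplicates (reordering or duplication would need more bookkeeping):

```python
from typing import List


def missing_frames(counters: List[int], bits: int = 16) -> int:
    """Count packets lost between consecutively received frame counters."""
    modulus = 1 << bits
    lost = 0
    for prev, cur in zip(counters, counters[1:]):
        # A gap of k means k - 1 packets never arrived; the modulus
        # handles the counter wrapping from 2**bits - 1 back to 0.
        lost += (cur - prev - 1) % modulus
    return lost


print(missing_frames([1, 2, 5]))             # 2 (frames 3 and 4)
print(missing_frames([65534, 65535, 0, 2]))  # 1 (frame 1, across the wrap)
```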

Indeed, this technique has worked so well that I’ve started applying something similar to video streams as well. Have you noticed, for example, that many video IPs are very sensitive to which IPs get reset first? That, however, may need to remain a topic for another day.

Why do the heathen rage, and the people imagine a vain thing? (Ps 2:1)